Scaling HTTP Azure Functions

Wednesday, February 15, 2017

One of the promises of serverless computing is automatic scaling based on load. I decided to run some HTTP load tests on Azure Functions to see how well it scales. In particular, how many instances does the function app scale out to? How does load affect response times and throughput? How stable is Azure Functions as it scales out?

Before we begin, I want to point out that this is by no means a scientific test. Take the results with a giant grain of salt.

Azure Functions and App Service Consumption Plans

Azure Functions runs on App Service infrastructure, with a few differences.

Unlike a Basic or Standard App Service Plan, which provides a pool of dedicated instances, a Function App on a Consumption Plan runs on a pool of instances shared with other Function Apps.

Another way a Consumption Plan differs from a Basic/Standard Plan is that a Function App on a Consumption Plan stores its files on a file share in a separate Storage account. This is necessary because the files must be mounted dynamically onto new App Service instances as the app scales out.

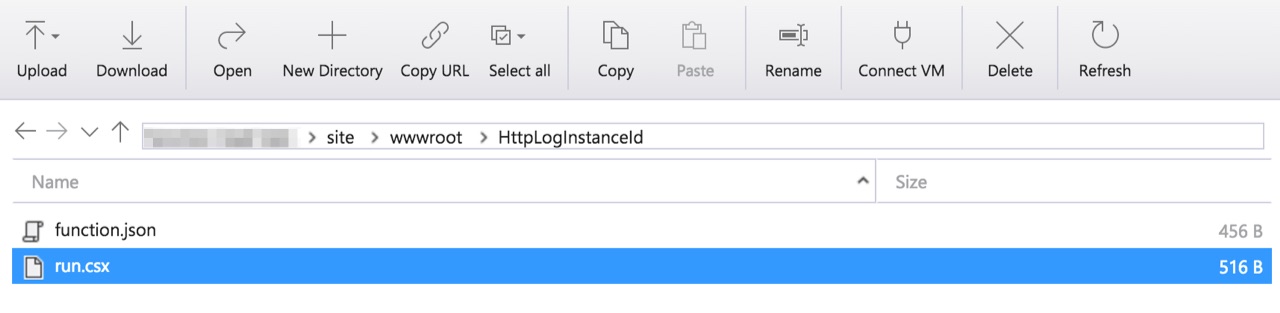

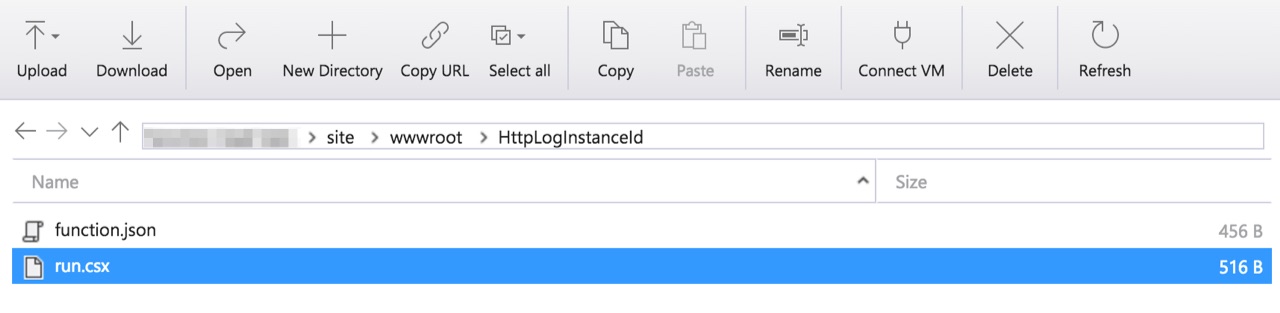

If we use Storage Explorer to look at the file shares in the storage account that was created alongside the Function App, we'll see our function's files:

App Service instance ids

Because we're running on Azure App Service, we have access to information about the environment via Kudu environment variables. One interesting variable is WEBSITE_INSTANCE_ID, which is the id of the App Service instance that the Function App is executing on. If we log the instance id on every function execution, the number of distinct instance ids tells us how many instances our app has scaled out to.

Here's a function that does exactly this. The function has HTTP input and output bindings, as well as an output binding to log our instance id to Table Storage. This is the function.json:

```json
{
  "bindings": [
    {
      "authLevel": "anonymous",
      "name": "req",
      "type": "httpTrigger",
      "direction": "in"
    },
    {
      "name": "$return",
      "type": "http",
      "direction": "out"
    },
    {
      "type": "table",
      "name": "instanceIds",
      "tableName": "HttpLoadTestInstanceIds",
      "connection": "StorageConnectionString",
      "direction": "out"
    }
  ],
  "disabled": false
}
```

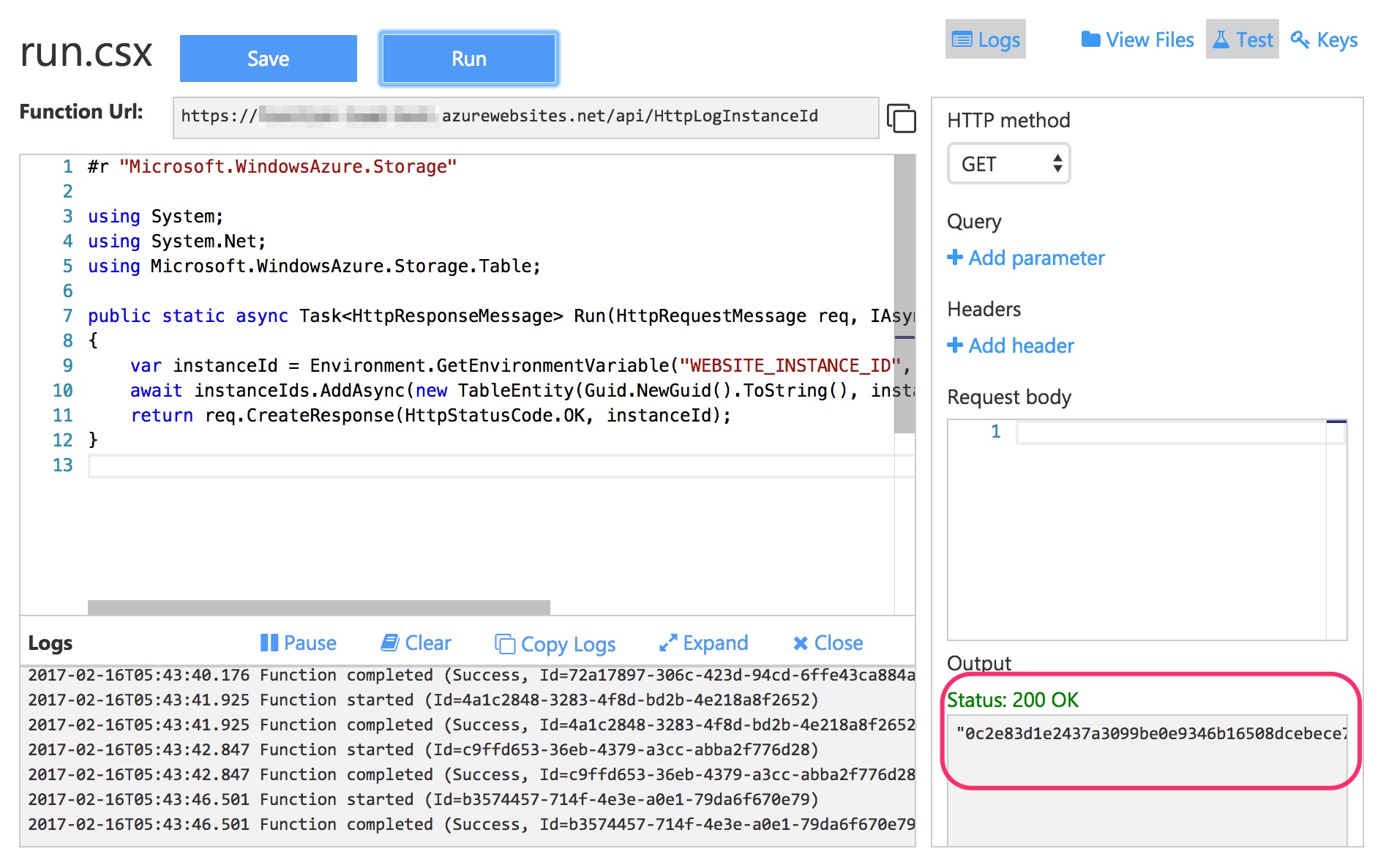

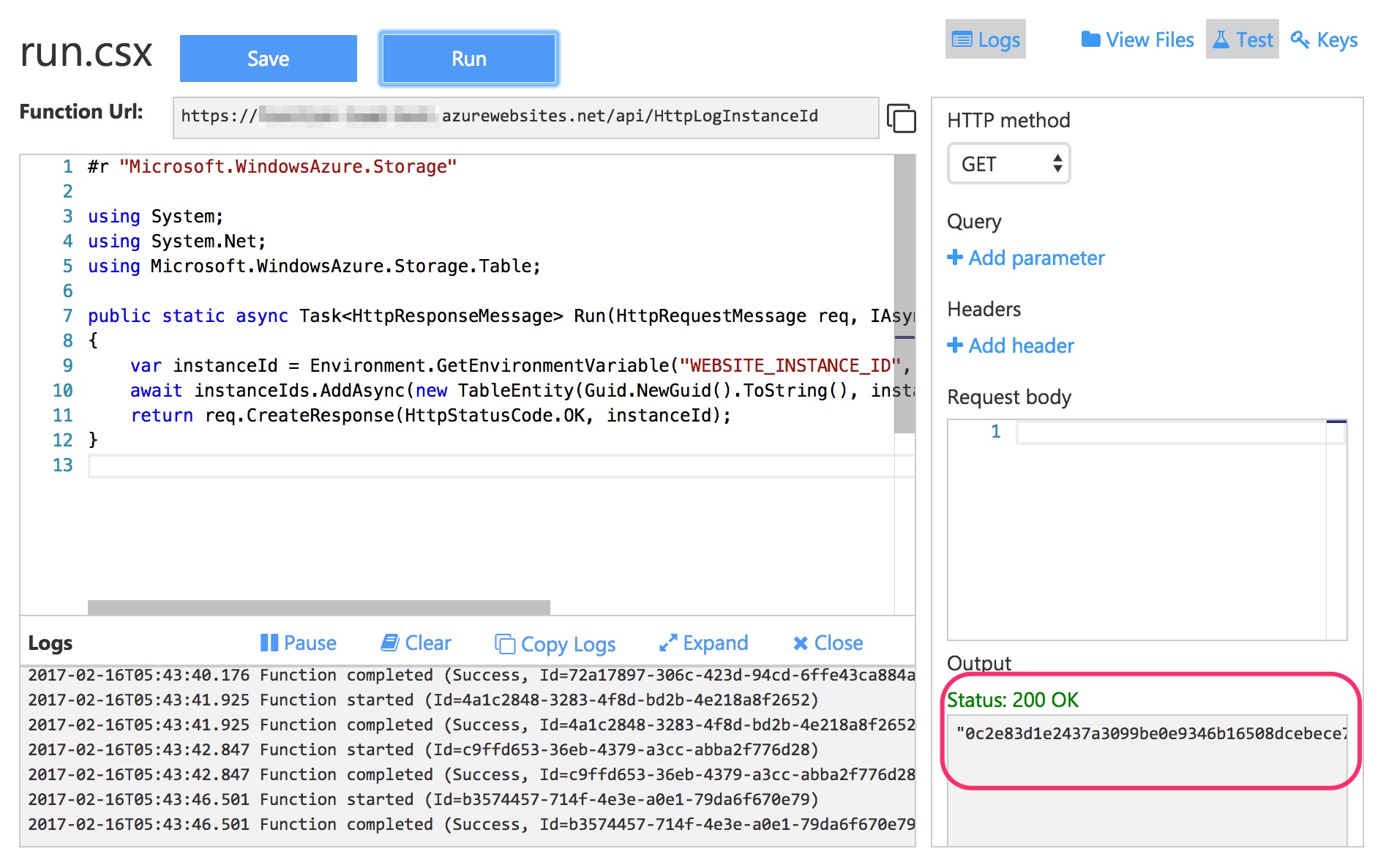

And here's the function itself. Very simple:

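```csharp
#r "Microsoft.WindowsAzure.Storage"

// In .csx functions, System.Net.Http, System.Threading.Tasks and the WebJobs
// namespaces (IAsyncCollector, TraceWriter) are imported by the host.
using System;
using System.Net;
using Microsoft.WindowsAzure.Storage.Table;

public static async Task<HttpResponseMessage> Run(
    HttpRequestMessage req, IAsyncCollector<TableEntity> instanceIds, TraceWriter log)
{
    // Id of the App Service instance currently executing this function.
    var instanceId = Environment.GetEnvironmentVariable(
        "WEBSITE_INSTANCE_ID",
        EnvironmentVariableTarget.Process);

    // One row per execution: a random GUID as PartitionKey and the instance id
    // as RowKey. Table Storage adds a Timestamp to each entity automatically.
    await instanceIds.AddAsync(new TableEntity(Guid.NewGuid().ToString(), instanceId));

    // Return the instance id so the caller can see which instance served it.
    return req.CreateResponse(HttpStatusCode.OK, instanceId);
}
```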

If we test the function, we should see that it consistently logs the same instance id. This tells us our Function App is running on only one instance.
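One way to check this from outside the portal is to call the function repeatedly and collect the instance ids it returns. The Python sketch below is an illustration, not part of the original setup. It assumes a hypothetical endpoint URL (`FUNCTION_URL` is a placeholder). Because `CreateResponse` usually serializes the string as JSON, the sketch strips the surrounding quotes with `json.loads`. The HTTP call is passed in as a parameter, so the counting logic can be tried without a live Function App.

```python
import json
import urllib.request
from typing import Callable, Set

# Hypothetical placeholder; substitute your own Function App endpoint.
FUNCTION_URL = "https://<your-app>.azurewebsites.net/api/<function-name>"

def fetch_instance_id(url: str = FUNCTION_URL) -> str:
    """Call the function once and return the instance id from the response body."""
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req) as resp:
        body = resp.read().decode("utf-8")
    try:
        # A JSON-serialized string arrives wrapped in quotes.
        return json.loads(body)
    except json.JSONDecodeError:
        return body.strip()

def distinct_instances(fetch: Callable[[], str], calls: int) -> Set[str]:
    """Invoke `fetch` `calls` times and return the set of instance ids seen."""
    return {fetch() for _ in range(calls)}
```

With no load on the app, `len(distinct_instances(fetch_instance_id, 20))` should be 1, matching the single instance described above.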

Running a load test

Visual Studio Team Services includes an easy way to set up and run HTTP load tests. I defined a simple load test that ramps up to 500 concurrent users and executes for 20 minutes against our function above.
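VSTS generated the load for this test. To make "concurrent users" concrete, here is a rough Python sketch of a closed-loop load generator. Each virtual user sends a request, waits for the response, and immediately sends the next one. Users start on a linear ramp up to `max_users`. The linear ramp and the injected `send` coroutine are assumptions for illustration, not how VSTS is configured internally. A real run would replace `send` with an HTTP call to the function.

```python
import asyncio
import time

async def virtual_user(send, deadline, latencies):
    """Send requests back to back until the deadline, recording each latency in seconds."""
    while time.monotonic() < deadline:
        start = time.monotonic()
        await send()
        latencies.append(time.monotonic() - start)

async def run_load(send, max_users, ramp_seconds, duration_seconds):
    """Ramp linearly up to max_users concurrent users, then run until duration_seconds."""
    latencies = []
    begin = time.monotonic()
    deadline = begin + duration_seconds
    tasks = []
    for i in range(max_users):
        # User i starts at i / max_users of the way through the ramp.
        delay = begin + ramp_seconds * i / max_users - time.monotonic()
        if delay > 0:
            await asyncio.sleep(delay)
        tasks.append(asyncio.create_task(virtual_user(send, deadline, latencies)))
    await asyncio.gather(*tasks)
    return latencies
```

Because each user waits for its response before sending again, slower responses directly reduce throughput. That coupling is why response time and requests per second move together in the charts.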

The results were pretty good. Here are the averages:

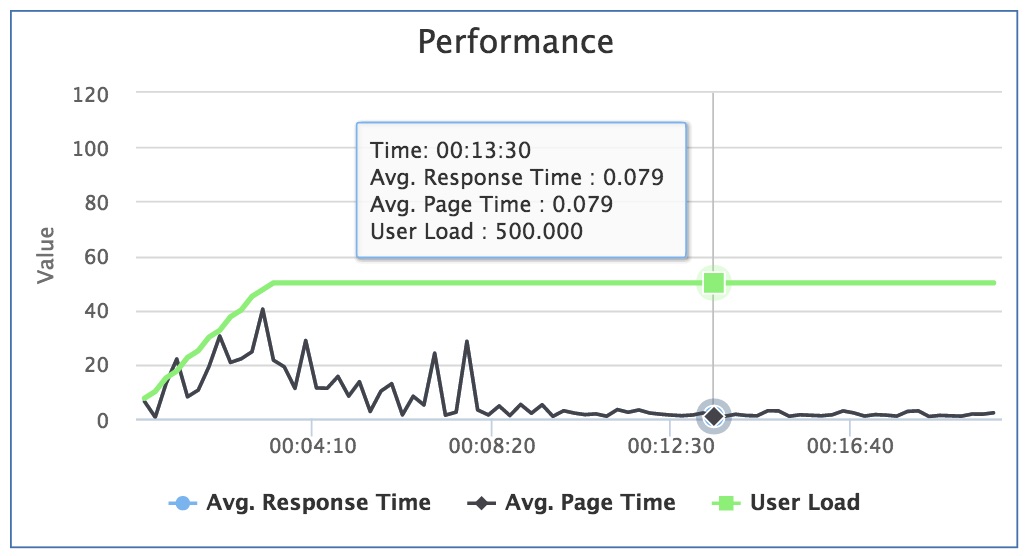

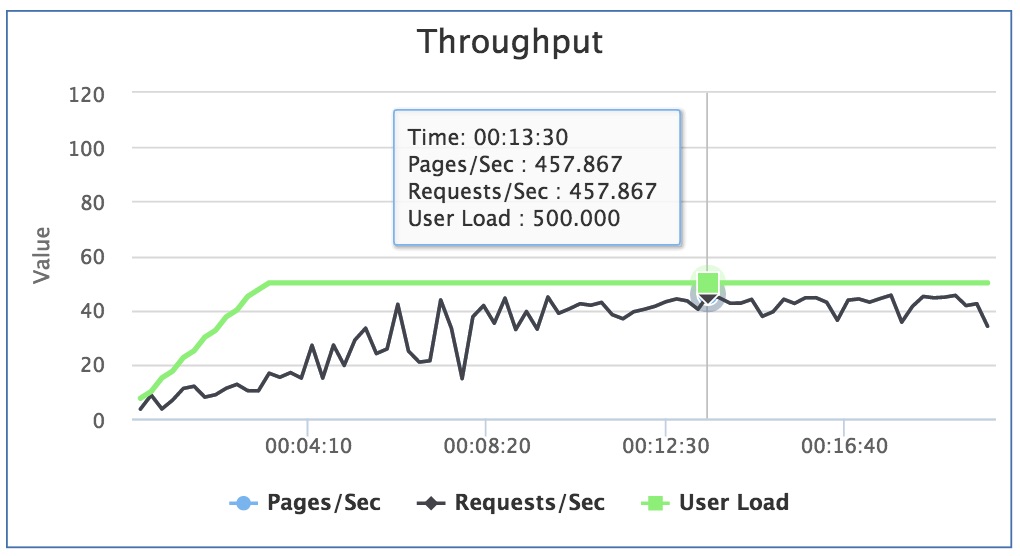

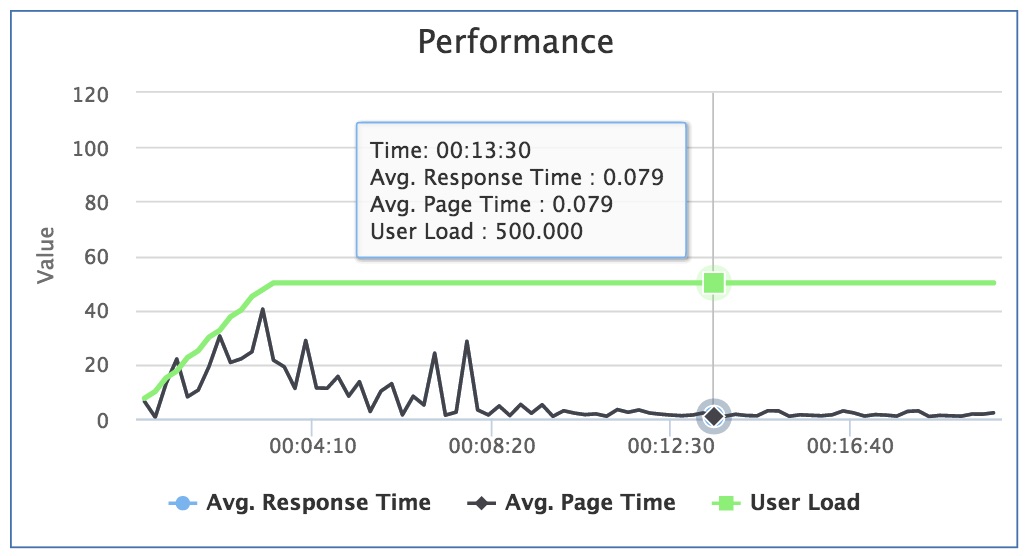

The charts give more insight into what happened over the course of the load test.

In the early stages of the test, response times spiked as high as 4 seconds while the Function App was still scaling out in response to the load. After about the 8-minute mark, the app appears to have scaled out enough to handle this amount of traffic, and response times consistently stayed under 200 ms.

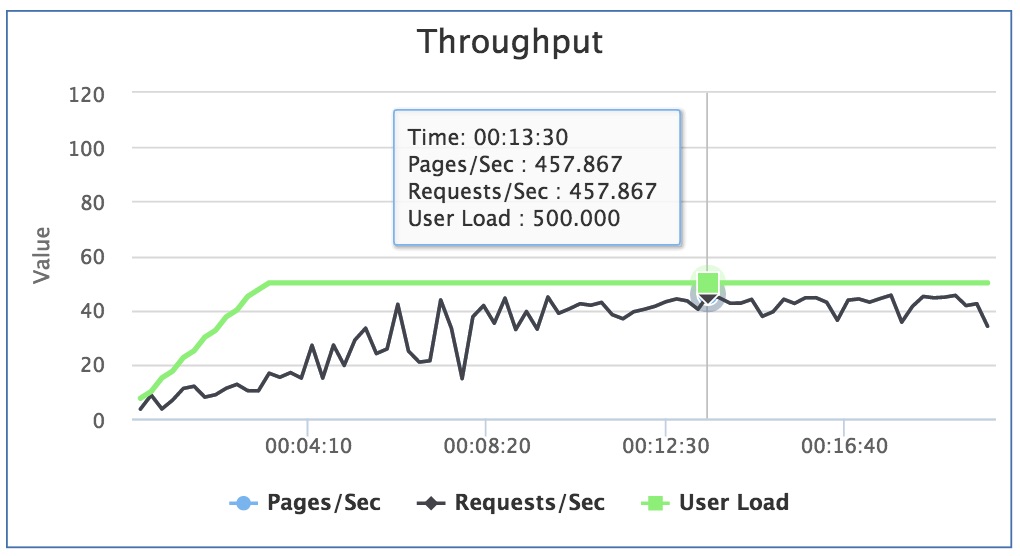
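To check a claim like "under 200 ms after the 8-minute mark" against raw data rather than a chart, one option is to split per-request samples at a cutoff and summarize each phase. This Python sketch assumes you have exported `(elapsed_seconds, latency_ms)` pairs from the load test. That export format is hypothetical.

```python
from statistics import mean

def summarize_latency(samples, cutoff_s):
    """Split (elapsed_seconds, latency_ms) samples at cutoff_s and summarize each side."""
    before = [lat for t, lat in samples if t < cutoff_s]
    after = [lat for t, lat in samples if t >= cutoff_s]

    def stats(values):
        # None when a phase has no samples, so callers can tell "empty" from "zero".
        if not values:
            return None
        return {"avg_ms": mean(values), "max_ms": max(values), "count": len(values)}

    return {"before": stats(before), "after": stats(after)}
```

For this test, `cutoff_s=480` (8 minutes) would separate the scale-out period from the steady state.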

The throughput chart shows that after the 8-minute mark we were consistently getting over 400 requests per second, with a peak of 457 RPS.

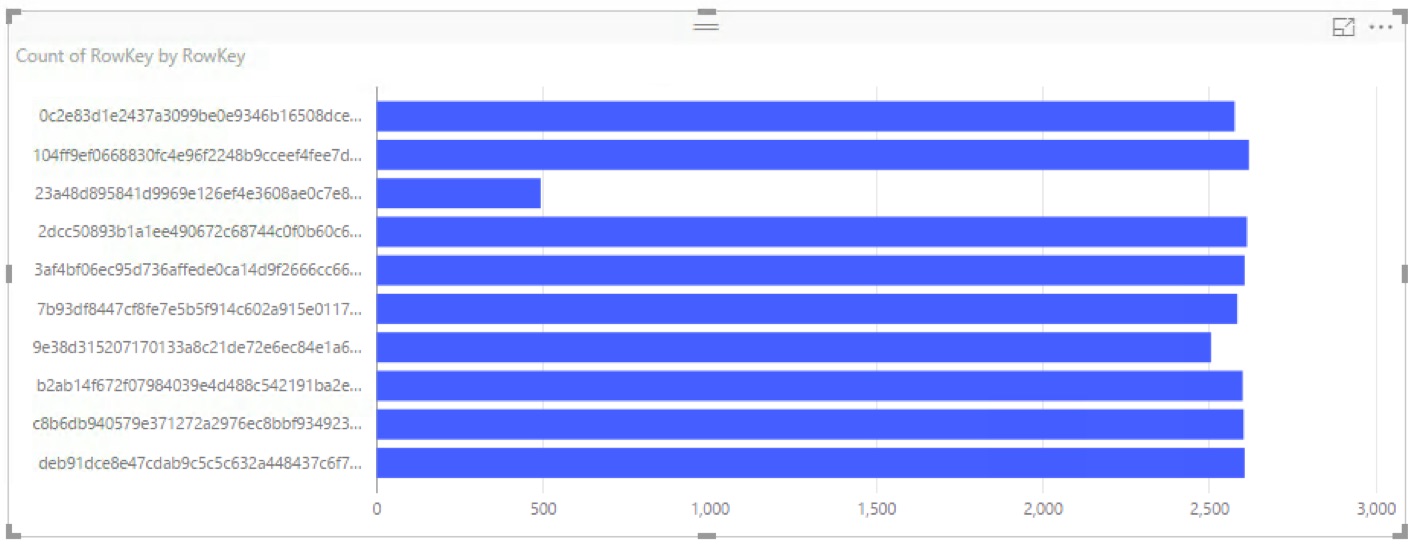

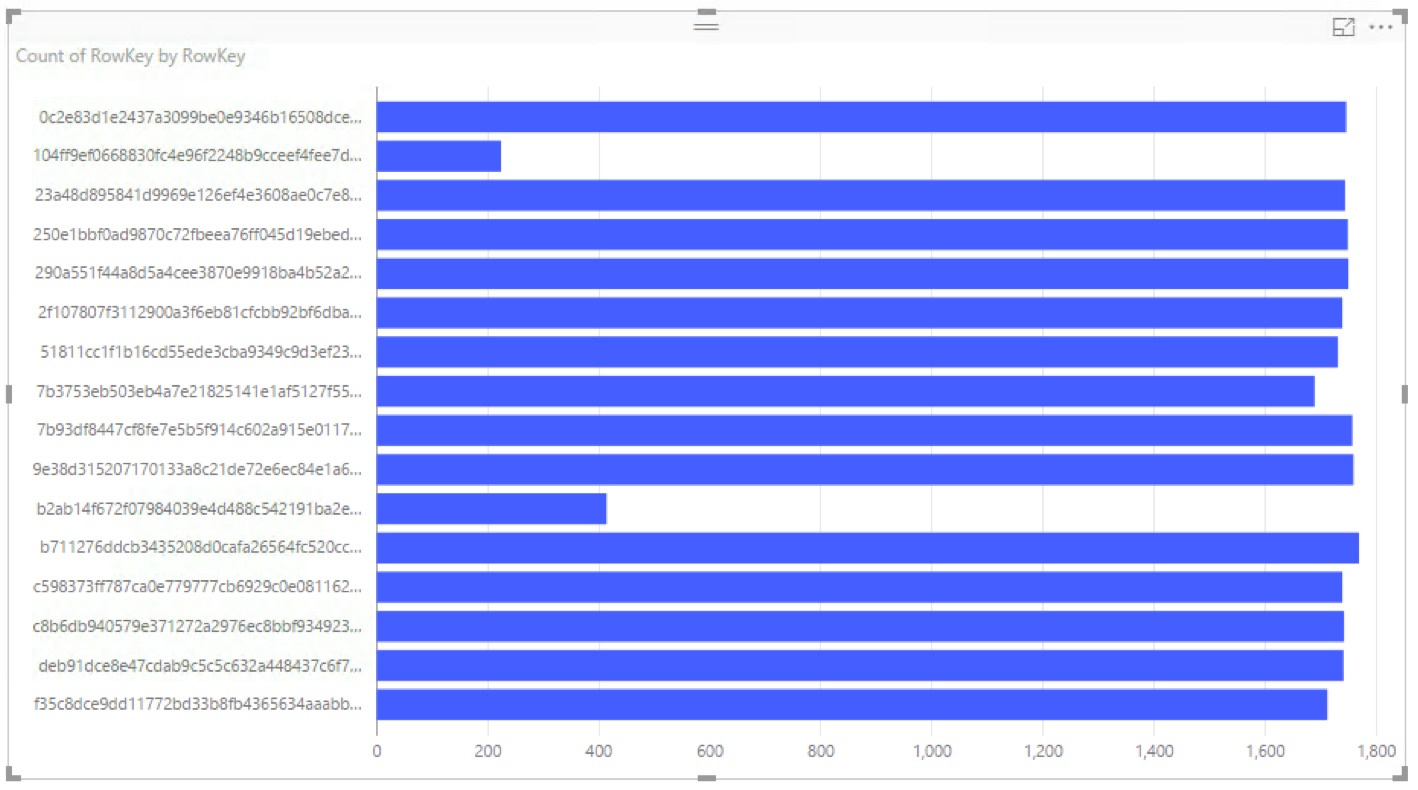
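Throughput and peak RPS can be derived from request completion times by bucketing them into whole seconds. This Python sketch assumes completion times are available as non-negative seconds since the start of the test, which is a hypothetical export format. Empty seconds are kept as 0 so that gaps in throughput remain visible.

```python
from collections import Counter

def throughput_per_second(completion_times_s):
    """Requests completed in each whole second, indexed from second 0."""
    counts = Counter(int(t) for t in completion_times_s)
    if not counts:
        return []
    last = max(counts)
    # Fill in seconds with no completions so dips show up as 0 RPS.
    return [counts.get(sec, 0) for sec in range(last + 1)]

def peak_rps(completion_times_s):
    """Highest per-second throughput, or 0 when there are no requests."""
    return max(throughput_per_second(completion_times_s), default=0)
```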

Function App scaling (500 concurrent users)

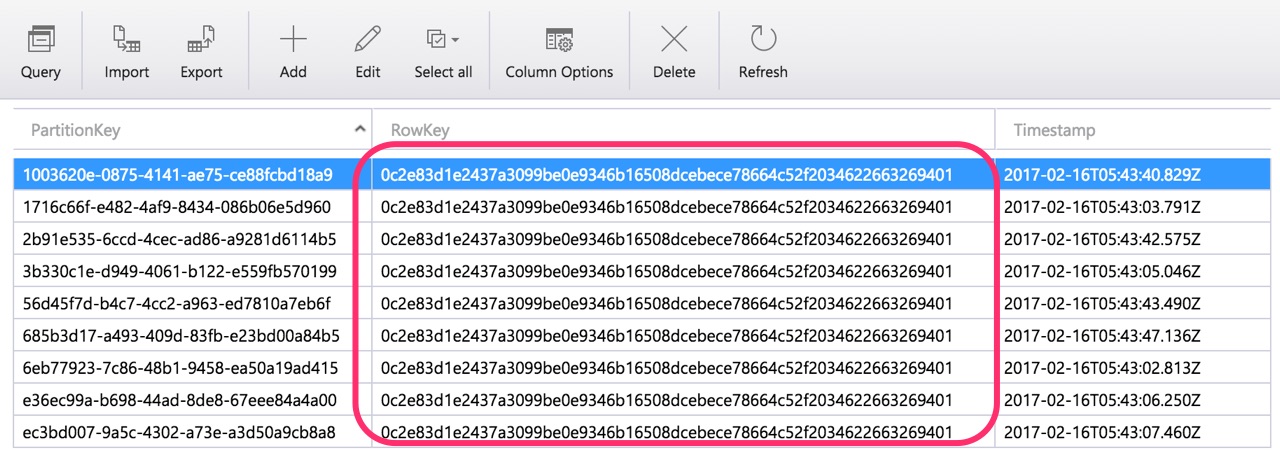

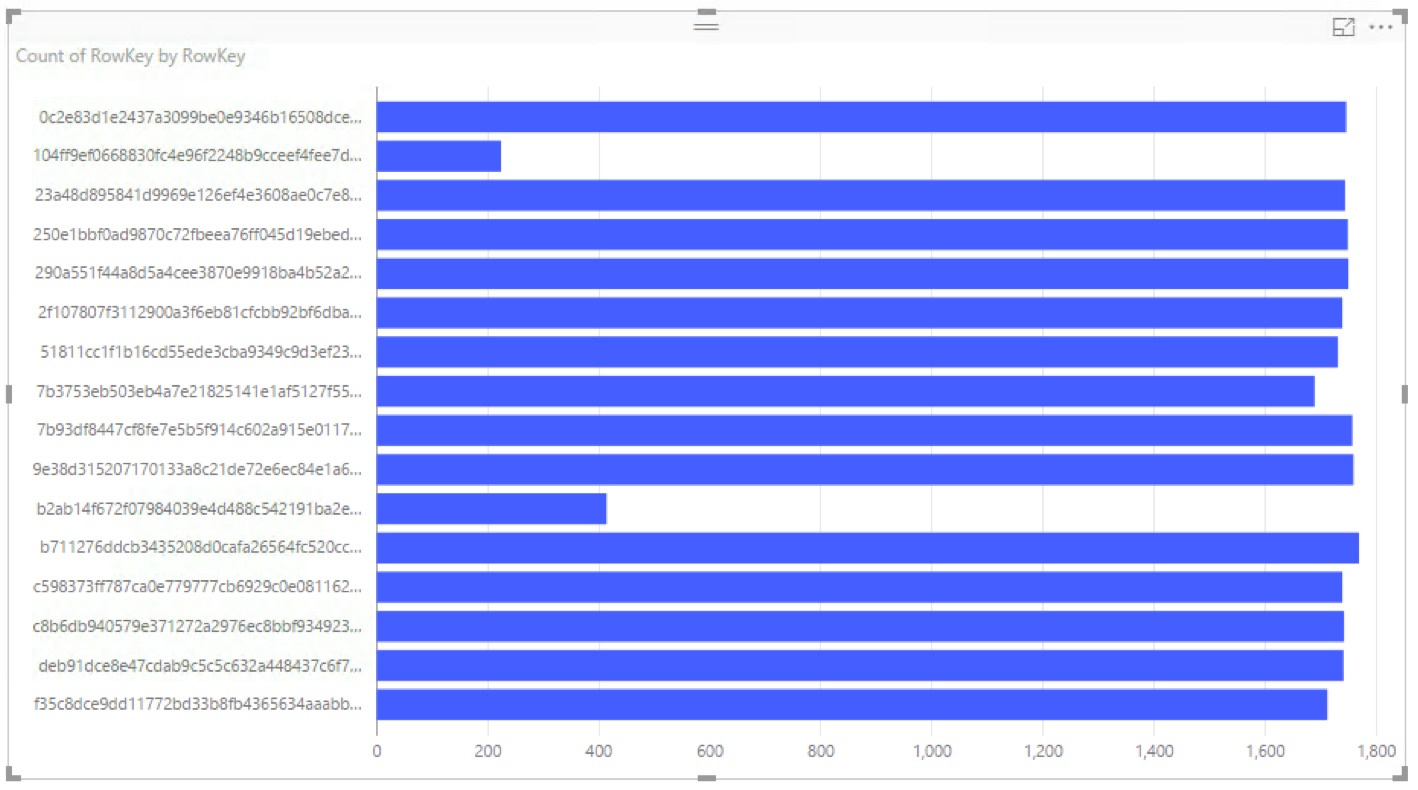

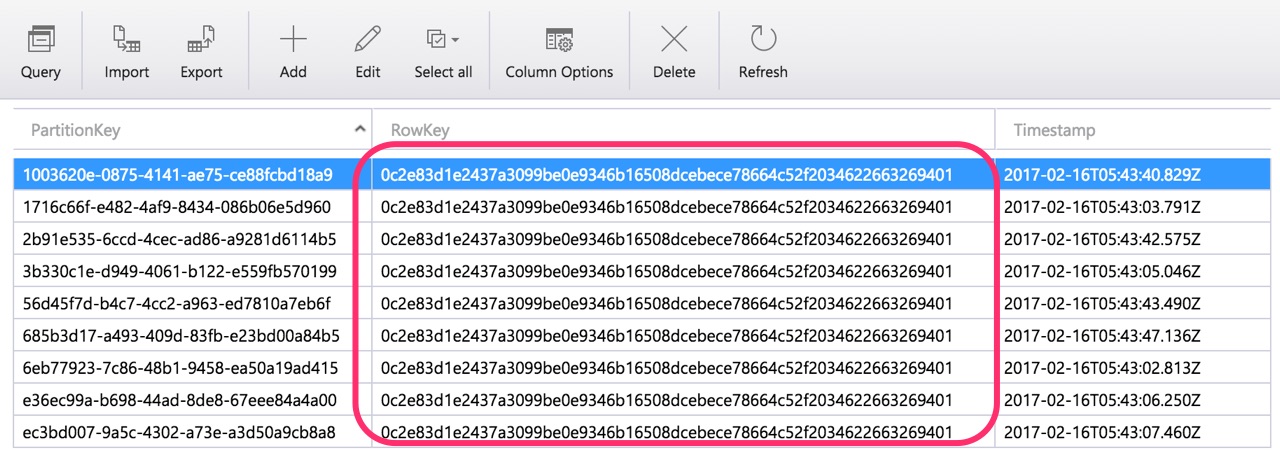

We can see how many instances the app scaled out to during the load test by analyzing the instance ids that were logged to Table Storage. To do this, we can use Power BI Desktop.

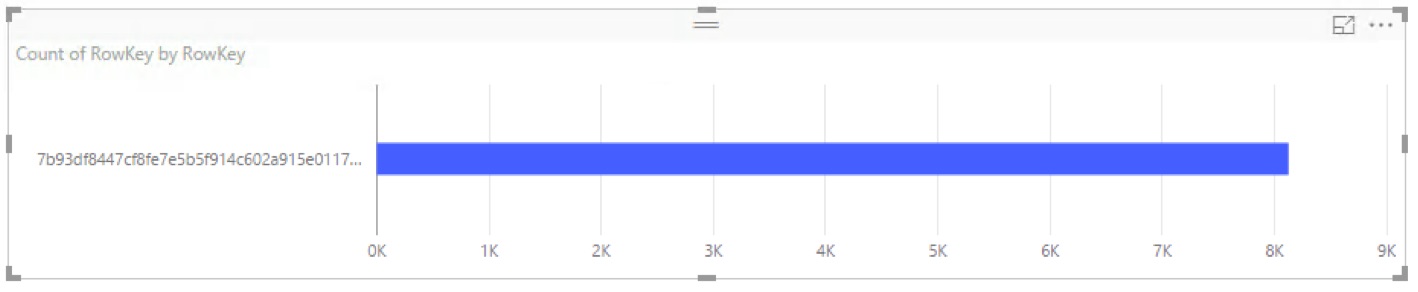

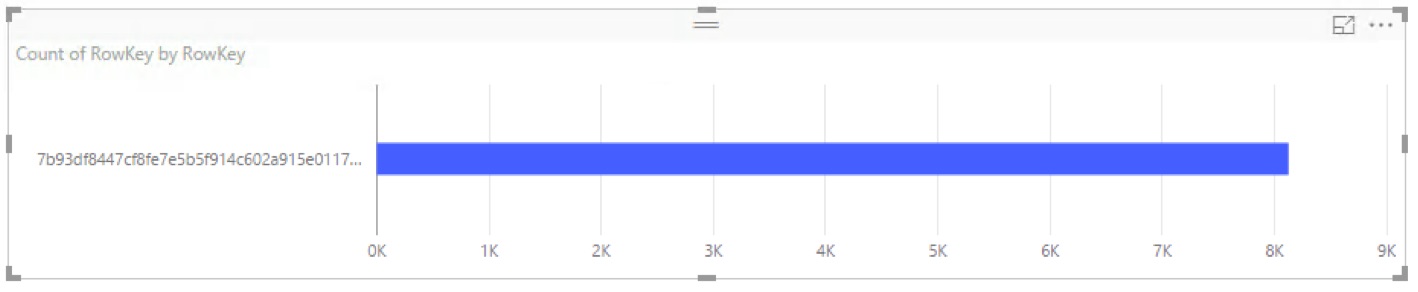
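If you prefer code to Power BI, the same breakdown is straightforward once the table rows are exported. The function above logs the instance id as the RowKey. Table Storage adds a `Timestamp` to every entity, and that timestamp makes a per-minute view possible. This Python sketch assumes the rows have already been loaded as `(timestamp, instance_id)` pairs. It counts instances *seen so far* by each minute, which assumes instances are not removed during the test. The example timestamps are made up and are not data from this load test.

```python
from datetime import datetime, timedelta

def instances_by_minute(rows):
    """Cumulative number of distinct instance ids seen by each minute of the test.

    rows: iterable of (timestamp, instance_id); minute 0 starts at the earliest timestamp.
    """
    rows = list(rows)
    if not rows:
        return {}
    start = min(ts for ts, _ in rows)
    first_seen = {}  # instance id -> minute it first appeared
    last_minute = 0
    for ts, instance_id in rows:
        minute = int((ts - start).total_seconds() // 60)
        last_minute = max(last_minute, minute)
        if minute < first_seen.get(instance_id, minute + 1):
            first_seen[instance_id] = minute
    return {
        m: sum(1 for fm in first_seen.values() if fm <= m)
        for m in range(last_minute + 1)
    }

# Example with made-up timestamps (not data from the load test).
t0 = datetime(2017, 2, 15, 12, 0, 0)
sample_rows = [
    (t0, "a"),
    (t0 + timedelta(seconds=90), "a"),
    (t0 + timedelta(minutes=2, seconds=5), "b"),
    (t0 + timedelta(minutes=2, seconds=30), "c"),
    (t0 + timedelta(minutes=4), "a"),
]
print(instances_by_minute(sample_rows))  # {0: 1, 1: 1, 2: 3, 3: 3, 4: 3}
```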

First 2 minutes

During the first couple of minutes, the app was running on only one instance:

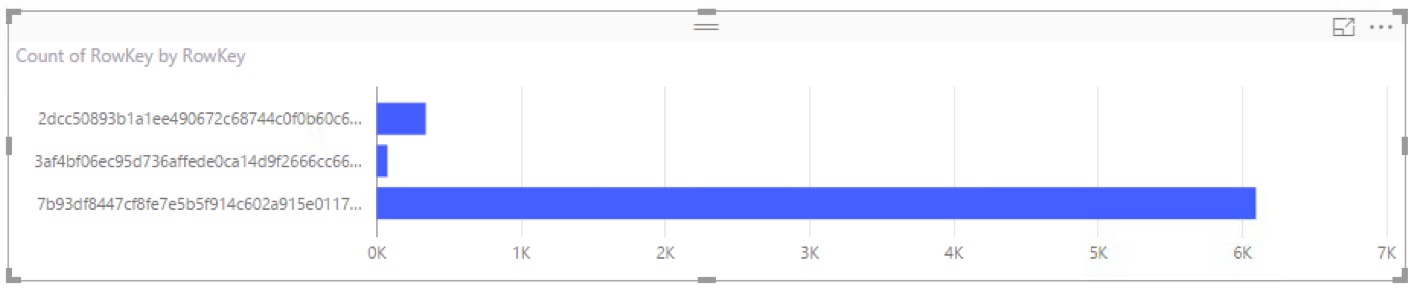

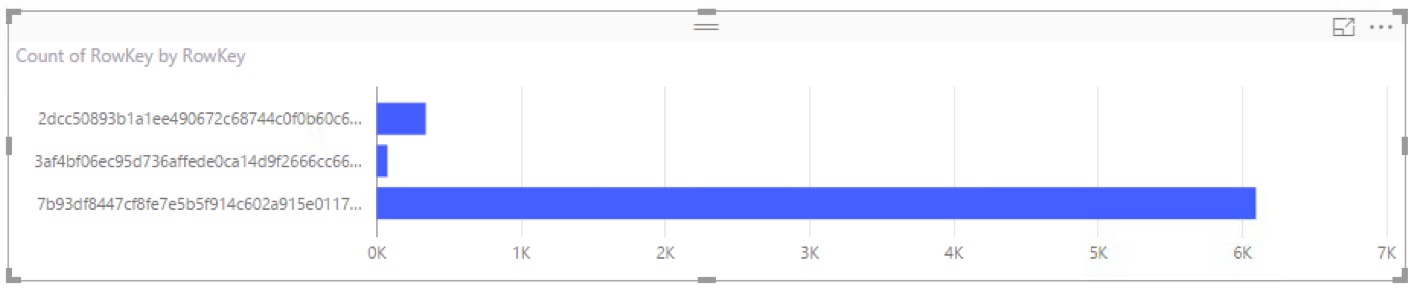

3 minutes

At around the 3-minute mark, we see a couple of new instances show up:

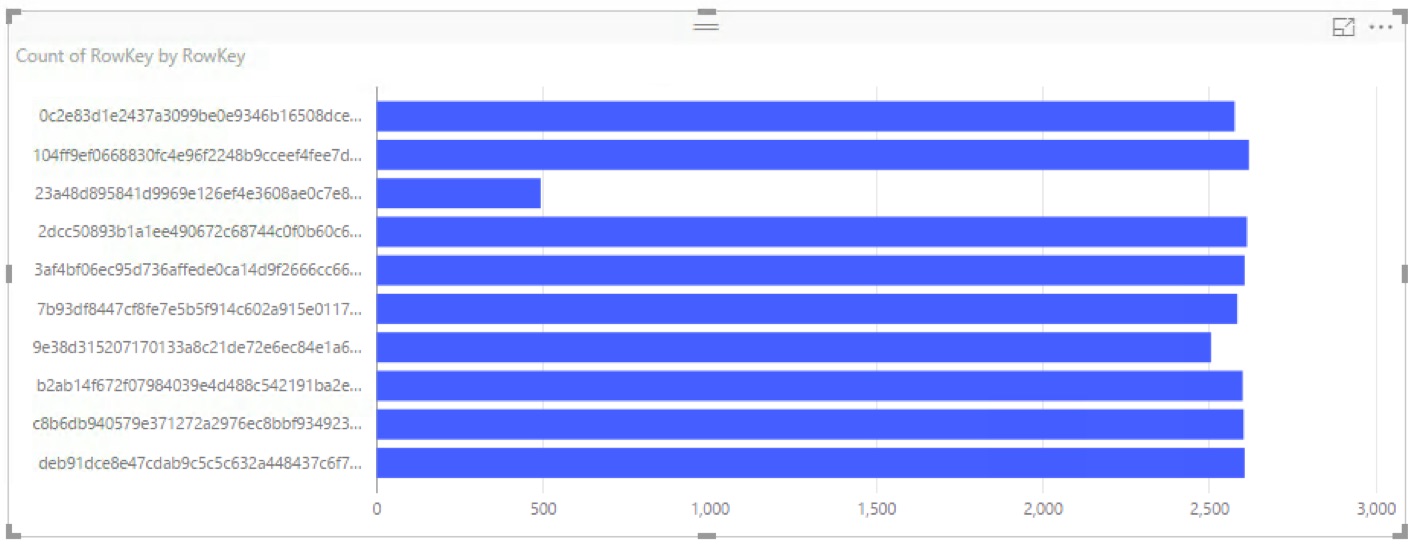
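To pinpoint when new instances join, as at the 3-minute mark, you can group instance ids by the minute in which each one first appears. Like the other sketches here, this is illustrative Python. It assumes exported `(timestamp, instance_id)` rows and uses made-up sample data.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def new_instances_per_minute(rows):
    """Map each minute (counted from the earliest row) to the instance ids first seen then."""
    rows = sorted(rows)  # chronological order; ties broken by instance id
    if not rows:
        return {}
    start = rows[0][0]
    seen = set()
    by_minute = defaultdict(list)
    for ts, instance_id in rows:
        if instance_id not in seen:
            seen.add(instance_id)
            by_minute[int((ts - start).total_seconds() // 60)].append(instance_id)
    return dict(by_minute)

# Example with made-up timestamps (not data from the load test).
t0 = datetime(2017, 2, 15, 12, 0, 0)
sample_rows = [
    (t0, "a"),
    (t0 + timedelta(seconds=90), "a"),
    (t0 + timedelta(minutes=2, seconds=5), "b"),
    (t0 + timedelta(minutes=2, seconds=30), "c"),
    (t0 + timedelta(minutes=4), "a"),
]
print(new_instances_per_minute(sample_rows))  # {0: ['a'], 2: ['b', 'c']}
```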

8 minutes

In the load test, the 8-minute mark was when we started seeing good, steady results. At this point, the app was up to 10 instances.

19 minutes

By the time the test was almost over, the app had scaled out to 16 instances.

1000 concurrent users

Can a Function App scale beyond 16 instances? It looks like it. I doubled the concurrent users to 1000 and ran another test. This time it scaled out to 18 instances!

Can it go even higher? I'd love to run more tests but VSTS load tests aren't free. :)

Summary

At least from these tests, Azure Functions appears to scale out readily for HTTP-triggered functions; neither test hit an upper limit on instances. In a future post, I'll take a look at scaling for other types of triggers.
