Process Azure API Management Logs with Data Lake Analytics and U-SQL

Sunday, February 12, 2017

Azure API Management comes with a rich set of built-in analytics to gain insight into the APIs' activities, such as usage by user, APIs, operations, and geography. But there are times when we want to perform custom queries over the activities. For this, API Management allows us to log API activities to Event Hubs.

Once the logs are flowing through Event Hubs, there are many ways we can use the data. For instance, we can save the logs into a Table Storage using Azure Functions and query it with Power BI; or we can process the live stream using Stream Analytics.

Today, we'll look at how to use Event Hubs Archive to automatically save the logs into Blob Storage. And we'll query the logs from Blob Storage using Data Lake Analytics.

I've already written an article with details on how to turn on Event Hubs Archive and query it using U-SQL with Data Lake Analytics. I won't repeat some of those details; instead I'll focus on how to use these tools with Azure API Management.

API Management Log to Event Hubs Policy

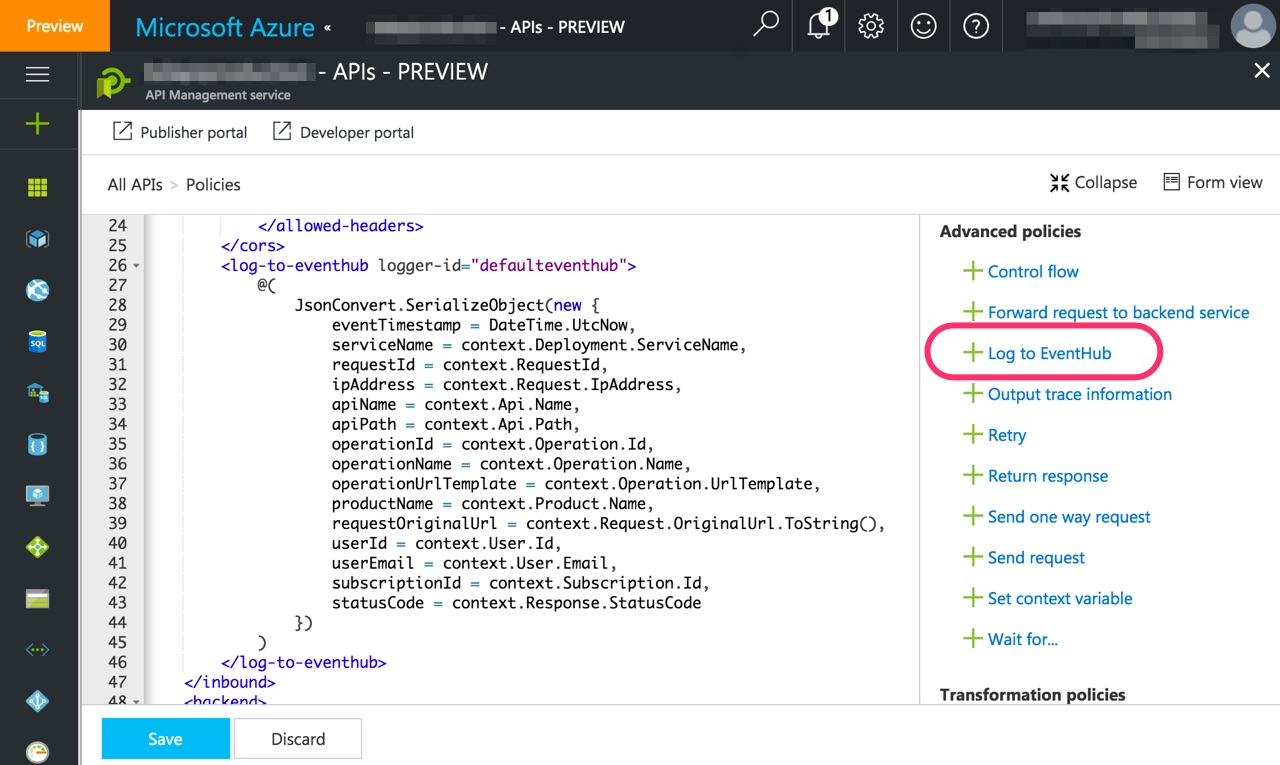

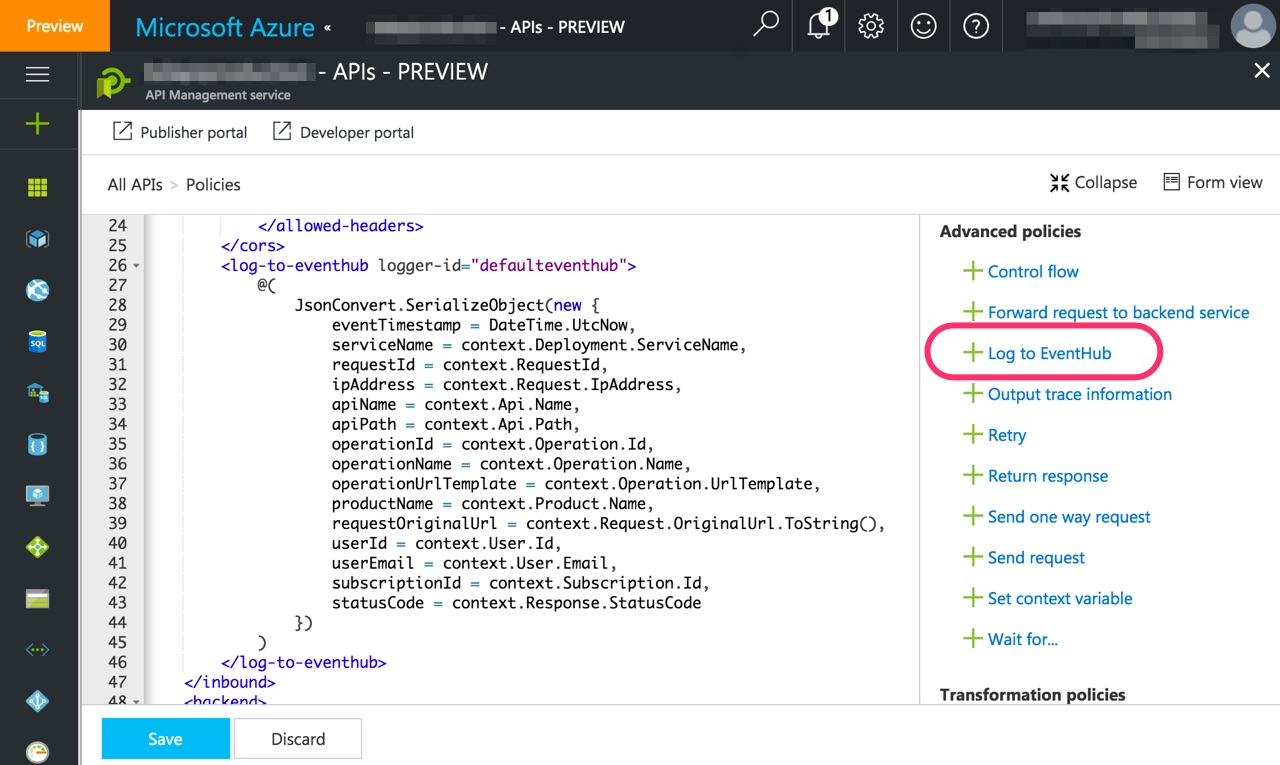

To log API activities to Event Hubs from API Management, we'll need to set up a Log to Event Hubs policy.

The first thing we need to do is to create an Event Hub and a logger in our API Management instance. Currently we can only create a logger with the API Management management REST API. Once the logger is set up, we'll set up a Log to Event Hubs policy. The full details on how to do this can be found in their documentation.

Here's an example of my policy where I log some information out as JSON:

Event Hubs Archive

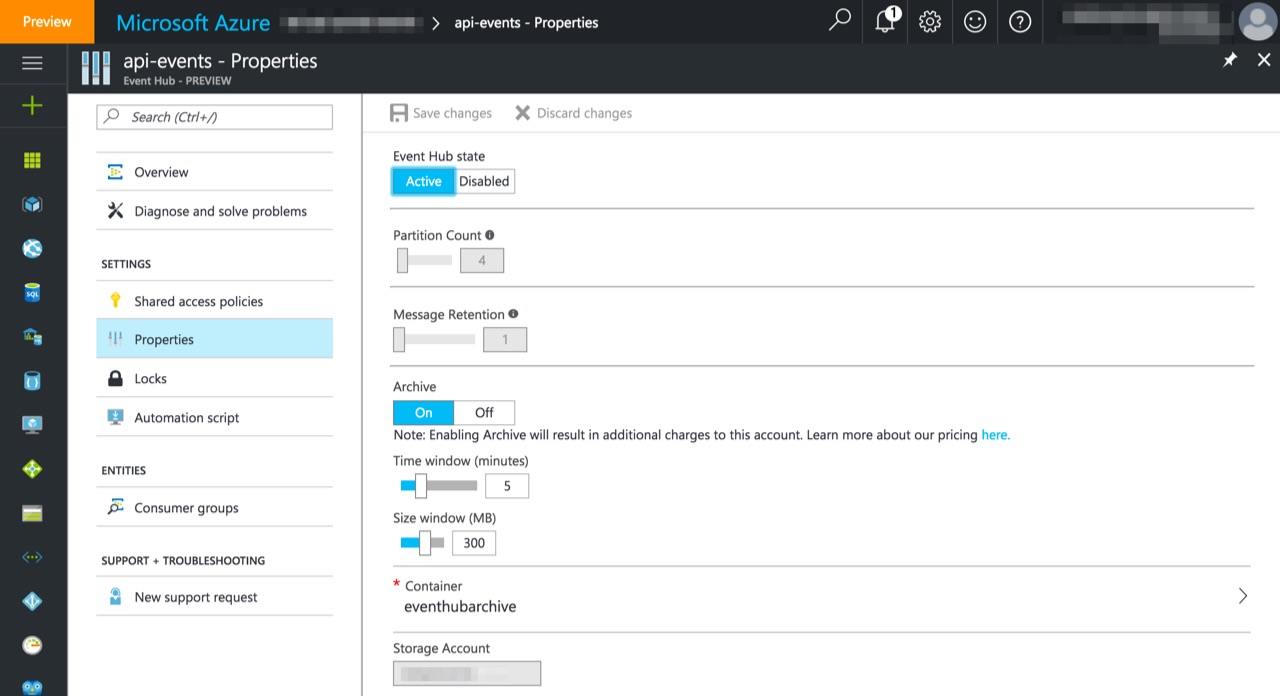

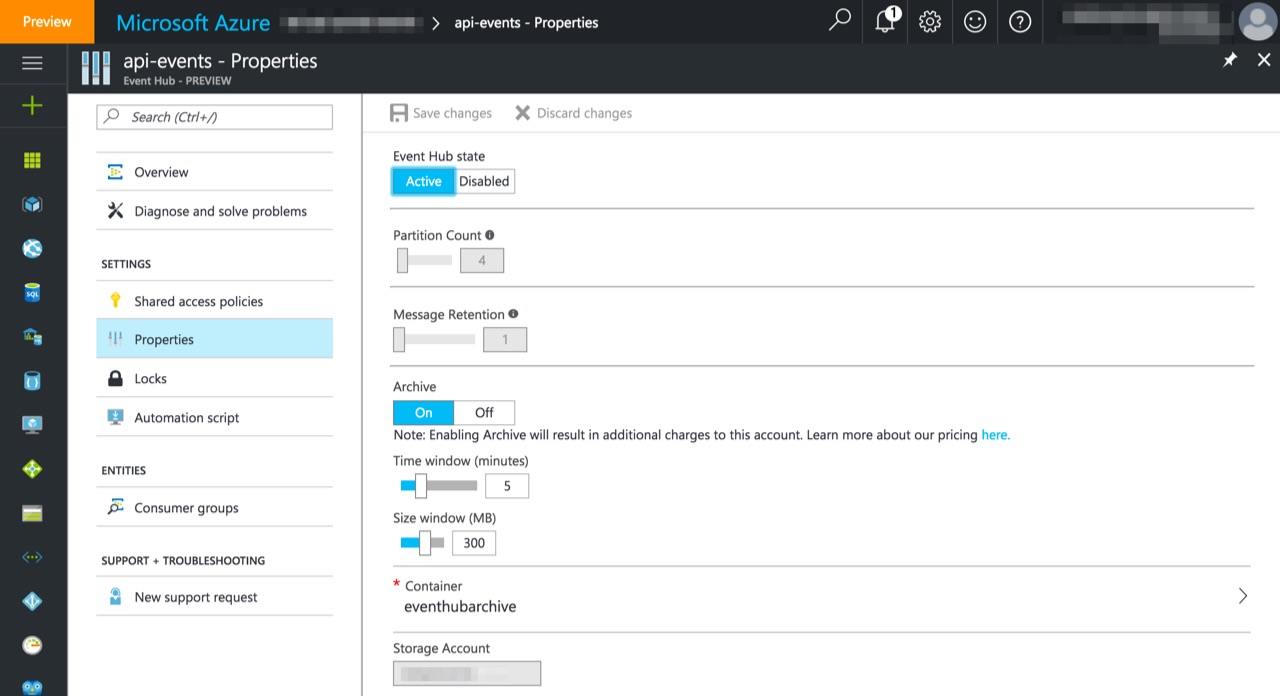

Event Hubs Archive allows us to automatically persist messages in an Event Hub into Blob Storage. We can turn it on in the Properties blade of the Event Hub.

For more information on how to set that up, check out my previous article.

Data Lake Analytics

Event Hubs Archive saves messages in Avro format. To set up Data Lake Analytics to extract Avro and to connect to the Storage account, once again refer to my previous article.

After Data Lake Analytics is set up, we can now write some U-SQL jobs to process our API Management logs.

Here is a U-SQL job to count up how many times each product in a product catalog API is accessed in a day:

REFERENCE ASSEMBLY [Newtonsoft.Json];

REFERENCE ASSEMBLY [Microsoft.Hadoop.Avro];

REFERENCE ASSEMBLY [Microsoft.Analytics.Samples.Formats];

USING Microsoft.Analytics.Samples.Formats.Avro;

USING Microsoft.Analytics.Samples.Formats.Json;

USING System.Text;

USING USQLApplication1;

@eventHubArchiveRecords =

EXTRACT Body byte[],

date DateTime

FROM @"wasb://eventhubarchive@myapievents.blob.core.windows.net/my-eventhub/api-events/{*}/{date:yyyy}/{date:MM}/{date:dd}/{date:HH}/{date:mm}/{*}"

USING new AvroExtractor(@"

{

""type"":""record"",

""name"":""EventData"",

""namespace"":""Microsoft.ServiceBus.Messaging"",

""fields"":[

{""name"":""SequenceNumber"",""type"":""long""},

{""name"":""Offset"",""type"":""string""},

{""name"":""EnqueuedTimeUtc"",""type"":""string""},

{""name"":""SystemProperties"",""type"":{""type"":""map"",""values"":[""long"",""double"",""string"",""bytes""]}},

{""name"":""Properties"",""type"":{""type"":""map"",""values"":[""long"",""double"",""string"",""bytes""]}},

{""name"":""Body"",""type"":[""null"",""bytes""]}

]

}

");

@jsonLogs =

SELECT JsonFunctions.JsonTuple(Encoding.UTF8.GetString(Body), "..*") AS json

FROM @eventHubArchiveRecords

WHERE date >= new DateTime(2017, 2, 9) AND date < new DateTime(2017, 2, 10);

@productIds =

SELECT Udfs.GetProductIdFromUrl(json["requestOriginalUrl"]) AS productId

FROM @jsonLogs

WHERE json["apiName"] == "Product Catalog"

&& json["operationName"] == "Get Product";

@productCounts =

SELECT productId,

COUNT(*) AS count

FROM @productIds

WHERE productId != null

GROUP BY productId;

OUTPUT @productCounts

TO "/anthony/output/apievents-productcounts.csv"

ORDER BY count

USING Outputters.Csv();

We're using a C# user defined function to parse the product id from the API URL:

using System.Text.RegularExpressions;

namespace USQLApplication1

{

public static class Udfs

{

public static string GetProductIdFromUrl(string url)

{

var match = Regex.Match(url, @"/products/(\d{8})$");

return match.Success ? match.Groups[1].Value : null;

}

}

}

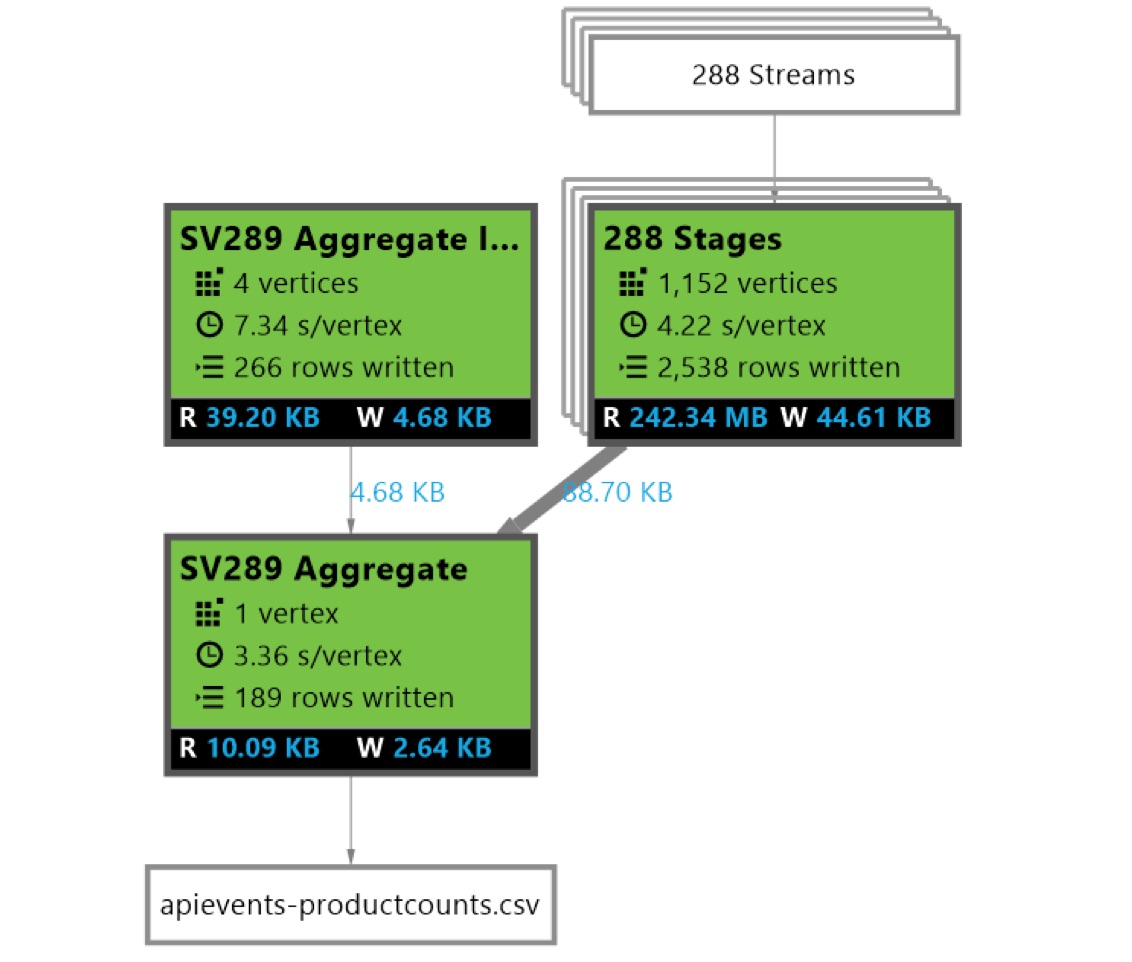

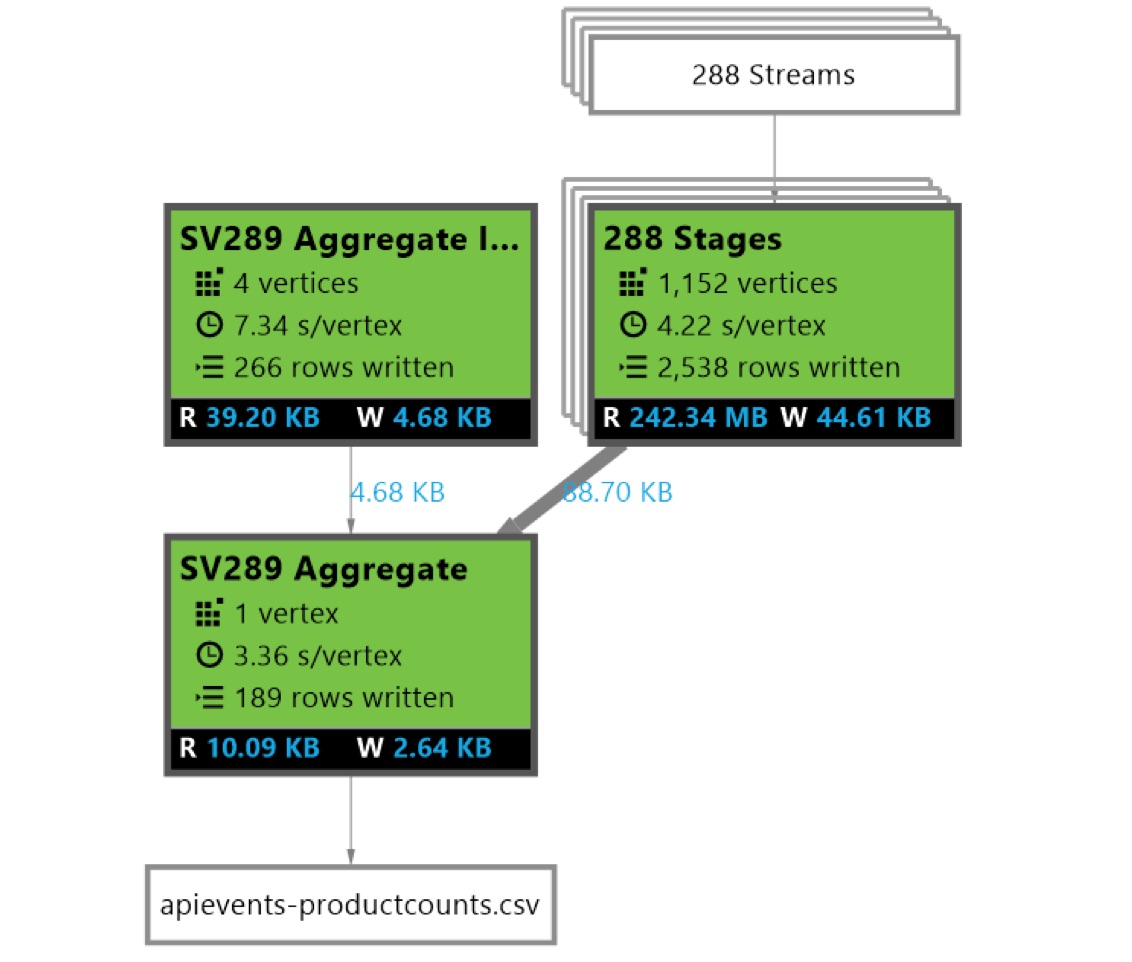

This job processed 1152 files in a few minutes:

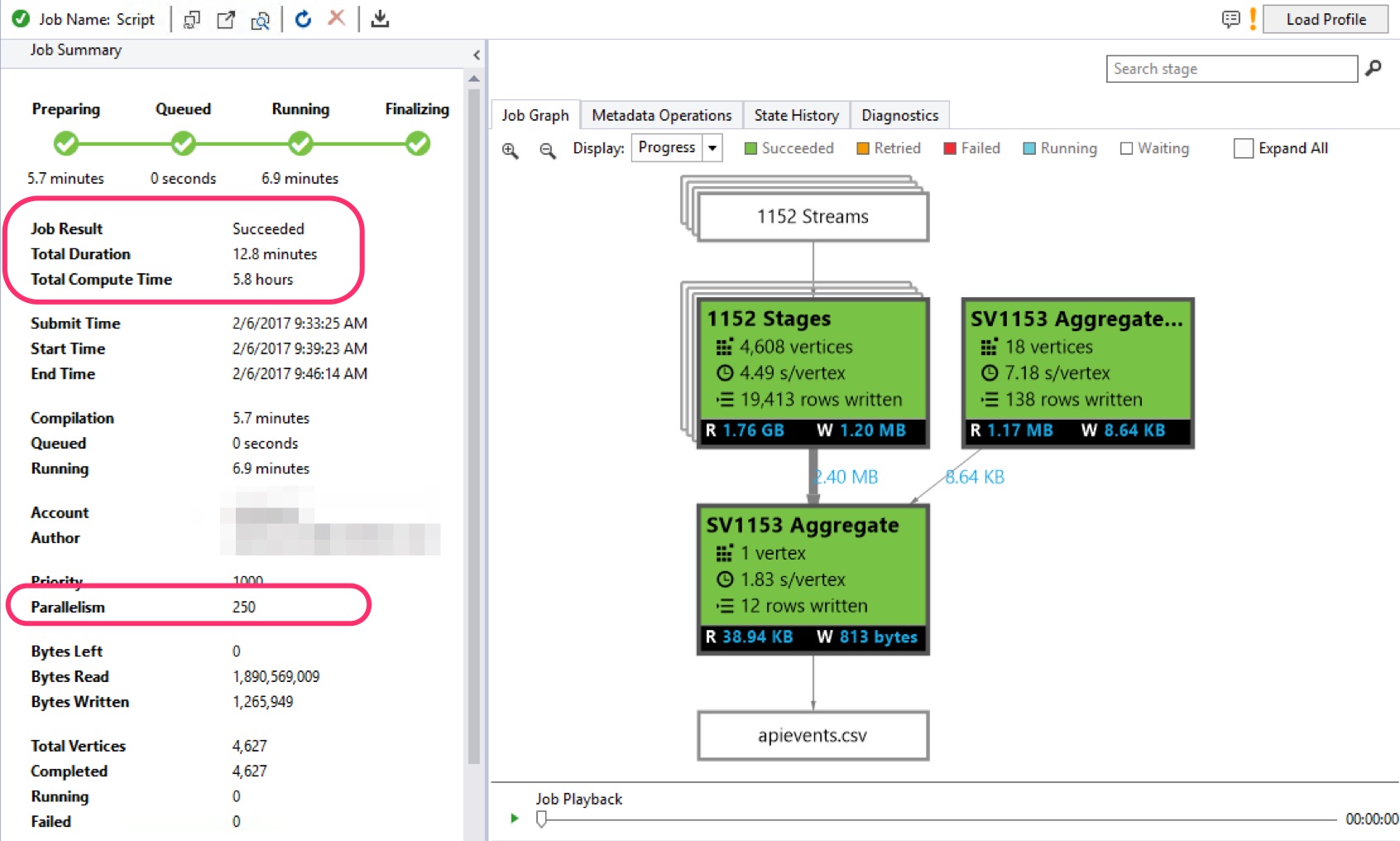

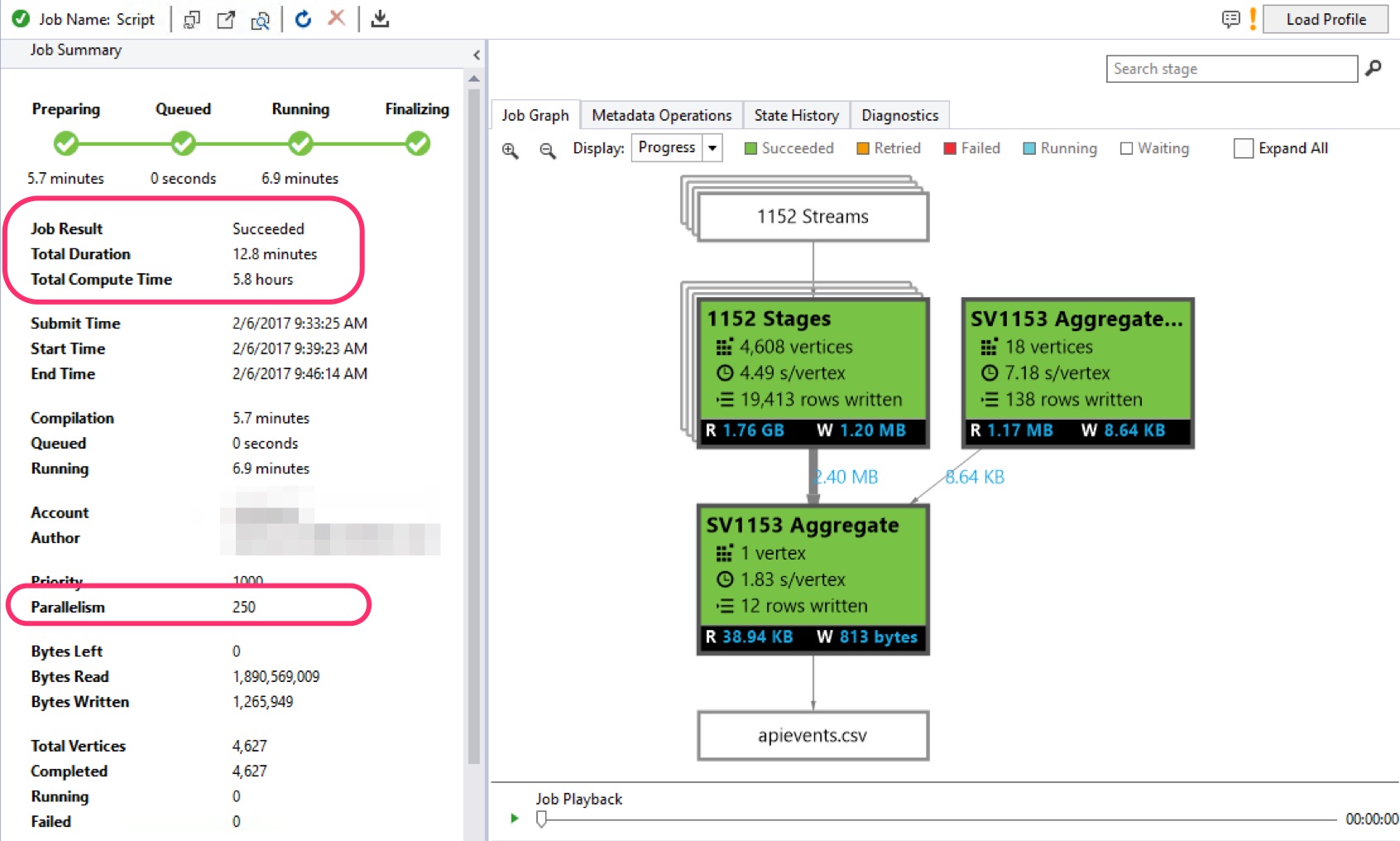

Here's another job that processed over 4600 larger files containing over 2 million log entries. It used 5.6 hours of compute time; but thanks to parallelism, it completed in under 13 minutes!

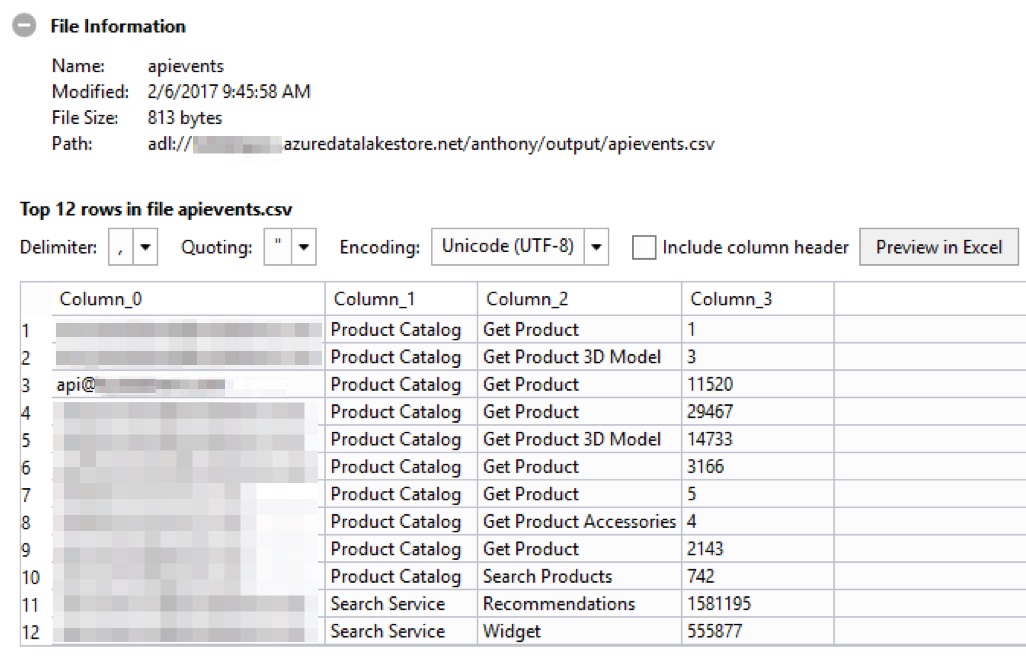

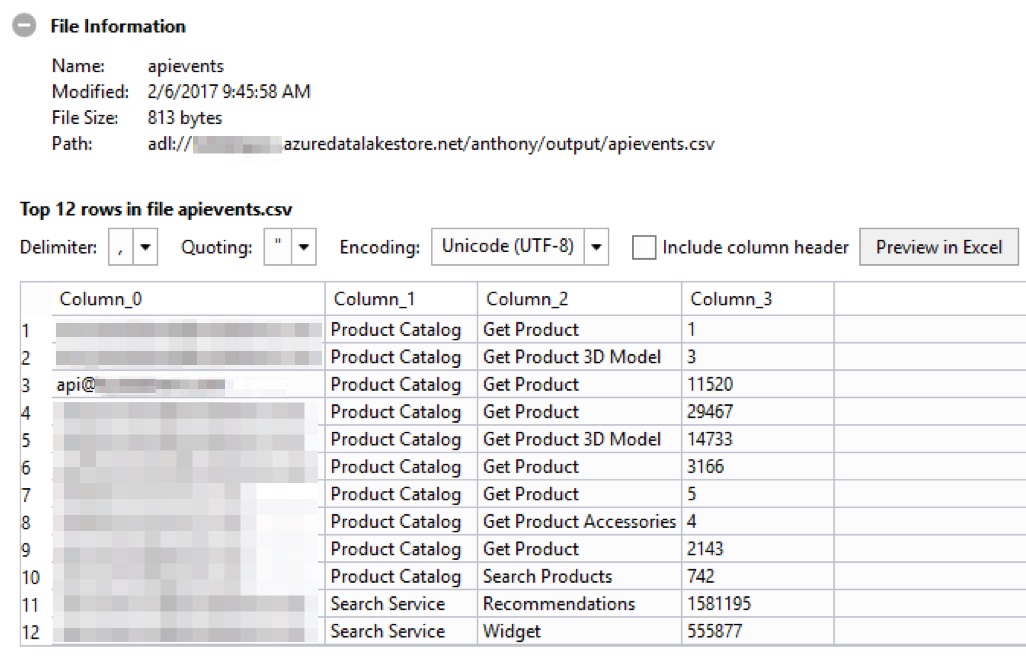

And here's the file it produced, listing API usage by user:

Azure API Management comes with a rich set of built-in analytics to gain insight into the APIs' activities, such as usage by user, APIs, operations, and geography. But there are times when we want to perform custom queries over the activities. For this, API Management allows us to log API activities to Event Hubs.

Once the logs are flowing through Event Hubs, there are many ways we can use the data. For instance, we can save the logs into a Table Storage using Azure Functions and query it with Power BI; or we can process the live stream using Stream Analytics.

Today, we'll look at how to use Event Hubs Archive to automatically save the logs into Blob Storage. And we'll query the logs from Blob Storage using Data Lake Analytics.

I've already written an article with details on how to turn on Event Hubs Archive and query it using U-SQL with Data Lake Analytics. I won't repeat some of those details; instead I'll focus on how to use these tools with Azure API Management.

API Management Log to Event Hubs Policy

To log API activities to Event Hubs from API Management, we'll need to set up a Log to Event Hubs policy.

The first thing we need to do is to create an Event Hub and a logger in our API Management instance. Currently we can only create a logger with the API Management management REST API. Once the logger is set up, we'll set up a Log to Event Hubs policy. The full details on how to do this can be found in their documentation.

Here's an example of my policy where I log some information out as JSON:

Event Hubs Archive

Event Hubs Archive allows us to automatically persist messages in an Event Hub into Blob Storage. We can turn it on in the Properties blade of the Event Hub.

For more information on how to set that up, check out my previous article.

Data Lake Analytics

Event Hubs Archive saves messages in Avro format. To set up Data Lake Analytics to extract Avro and to connect to the Storage account, once again refer to my previous article.

After Data Lake Analytics is set up, we can now write some U-SQL jobs to process our API Management logs.

Here is a U-SQL job to count up how many times each product in a product catalog API is accessed in a day:

REFERENCE ASSEMBLY [Newtonsoft.Json];

REFERENCE ASSEMBLY [Microsoft.Hadoop.Avro];

REFERENCE ASSEMBLY [Microsoft.Analytics.Samples.Formats];

USING Microsoft.Analytics.Samples.Formats.Avro;

USING Microsoft.Analytics.Samples.Formats.Json;

USING System.Text;

USING USQLApplication1;

@eventHubArchiveRecords =

EXTRACT Body byte[],

date DateTime

FROM @"wasb://eventhubarchive@myapievents.blob.core.windows.net/my-eventhub/api-events/{*}/{date:yyyy}/{date:MM}/{date:dd}/{date:HH}/{date:mm}/{*}"

USING new AvroExtractor(@"

{

""type"":""record"",

""name"":""EventData"",

""namespace"":""Microsoft.ServiceBus.Messaging"",

""fields"":[

{""name"":""SequenceNumber"",""type"":""long""},

{""name"":""Offset"",""type"":""string""},

{""name"":""EnqueuedTimeUtc"",""type"":""string""},

{""name"":""SystemProperties"",""type"":{""type"":""map"",""values"":[""long"",""double"",""string"",""bytes""]}},

{""name"":""Properties"",""type"":{""type"":""map"",""values"":[""long"",""double"",""string"",""bytes""]}},

{""name"":""Body"",""type"":[""null"",""bytes""]}

]

}

");

@jsonLogs =

SELECT JsonFunctions.JsonTuple(Encoding.UTF8.GetString(Body), "..*") AS json

FROM @eventHubArchiveRecords

WHERE date >= new DateTime(2017, 2, 9) AND date < new DateTime(2017, 2, 10);

@productIds =

SELECT Udfs.GetProductIdFromUrl(json["requestOriginalUrl"]) AS productId

FROM @jsonLogs

WHERE json["apiName"] == "Product Catalog"

&& json["operationName"] == "Get Product";

@productCounts =

SELECT productId,

COUNT(*) AS count

FROM @productIds

WHERE productId != null

GROUP BY productId;

OUTPUT @productCounts

TO "/anthony/output/apievents-productcounts.csv"

ORDER BY count

USING Outputters.Csv();

We're using a C# user defined function to parse the product id from the API URL:

using System.Text.RegularExpressions;

namespace USQLApplication1

{

public static class Udfs

{

public static string GetProductIdFromUrl(string url)

{

var match = Regex.Match(url, @"/products/(\d{8})$");

return match.Success ? match.Groups[1].Value : null;

}

}

}

This job processed 1152 files in a few minutes:

Here's another job that processed over 4600 larger files containing over 2 million log entries. It used 5.6 hours of compute time; but thanks to parallelism, it completed in under 13 minutes!

And here's the file it produced, listing API usage by user: