Versioning a REST API in Kubernetes with NGINX Ingress Controller

Tuesday, April 11, 2017

An ingress makes it easy to route traffic entering a Kubernetes cluster through a load balancer like NGINX. Beyond basic load balancing and TLS termination, an ingress can have rules for routing to different backends based on paths. The NGINX ingress controller also allows more advanced configurations such as URL rewrites.

In this post, we'll use ingress rules and URL rewrites to route traffic between two versions of a REST API. Each version is deployed separately (api-version1 and api-version2) and exposed through its own service (version1-service and version2-service). We will route traffic with the path /api/v1 to api-version1, and /api/v2 to api-version2.

The sample application

We'll be using a super simple ASP.NET Core application. Here's the Configure() method in Startup.cs:

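```csharp
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;      // WriteAsync extension method
using Microsoft.Extensions.Logging;
using Newtonsoft.Json;                // JsonConvert

// ... inside the Startup class:
public void Configure(IApplicationBuilder app, IHostingEnvironment env, ILoggerFactory loggerFactory)
{
    loggerFactory.AddConsole();

    if (env.IsDevelopment())
    {
        app.UseDeveloperExceptionPage();
    }

    // Set to 1 for the first Docker image and 2 for the second.
    var version = 1;

    // The API's only route: returns the version and the container's machine name as JSON.
    app.Map("/api/info", a =>
        a.Run(async context =>
        {
            context.Response.ContentType = "application/json";
            await context.Response.WriteAsync(JsonConvert.SerializeObject(
                new
                {
                    version = version,
                    machineName = Environment.MachineName
                }
            ));
        })
    );

    // Everything else returns a 404 that echoes the requested path.
    app.Run(async context =>
    {
        context.Response.StatusCode = 404;
        await context.Response.WriteAsync(
            $"Nothing found at {context.Request.Path} {Environment.MachineName} (version {version})");
    });
}
```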

Our "API" only has one route at /api/info. Everything else returns a 404 page.
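To make the routing behaviour concrete, here is a small Python model of what the handler above returns for a given path. It is an illustrative sketch, not part of the sample app: the function name `app_response` is hypothetical, and it matches `/api/info` exactly, which simplifies how `app.Map` matches path segments.

```python
import json
from typing import Tuple


def app_response(path: str, version: int, machine_name: str) -> Tuple[int, str]:
    """Model the sample app: return (status code, body) for a request path.

    Hypothetical helper mirroring Configure(); exact matching on /api/info
    is a simplification of ASP.NET Core's app.Map segment matching.
    """
    if path == "/api/info":
        # Same shape as the anonymous object serialized by JsonConvert.
        body = json.dumps(
            {"version": version, "machineName": machine_name},
            separators=(",", ":"),
        )
        return 200, body
    # Every other path falls through to the 404 handler.
    return 404, f"Nothing found at {path} {machine_name} (version {version})"


print(app_response("/api/info", 1, "pod-a"))     # (200, '{"version":1,"machineName":"pod-a"}')
print(app_response("/api/v1/info", 1, "pod-a"))  # (404, 'Nothing found at /api/v1/info pod-a (version 1)')
```

The second call shows why a request that reaches the container as /api/v1/info, with the version prefix still attached, ends up on the 404 page.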

We will create a Docker image with the version set to 1, and a second Docker image with it set to 2. We'll push both to Docker Hub; they represent the two versions of our API.

Deploying the application

We'll deploy each version of the application to our Kubernetes cluster with this template (shown here for version 1):

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: api-version1
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: api-version1
    spec:
      containers:
      - name: api-version1
        image: anthonychu/demo-kube-ingress-versions:1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
        readinessProbe:
          httpGet:
            path: /api/info
            port: 80
          initialDelaySeconds: 3
          periodSeconds: 3
```

And we'll also create a service for each:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: version1-service
spec:
  selector:
    app: api-version1
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
```

The service has a type of ClusterIP, which means it's only reachable from within the cluster. It'll be up to the NGINX ingress controller to expose it to the outside world.
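Since the Deployment and Service differ between versions only in their names, labels and image tag, they can be generated from one template. The Python sketch below builds both manifests for a given version number and prints them as a single JSON `List`, since kubectl accepts JSON manifests as well as YAML. The helper name `manifests_for_version` and the `:2` image tag for version 2 are assumptions that follow the naming pattern of version 1; the API versions are kept as in this post.

```python
import json
from typing import Dict, List


def manifests_for_version(n: int) -> List[Dict]:
    """Build the Deployment and ClusterIP Service for API version n.

    Hypothetical helper: mirrors the version 1 templates, with the image
    tag assumed to equal the version number.
    """
    app = f"api-version{n}"
    deployment = {
        "apiVersion": "extensions/v1beta1",
        "kind": "Deployment",
        "metadata": {"name": app},
        "spec": {
            "replicas": 2,
            "template": {
                "metadata": {"labels": {"app": app}},
                "spec": {
                    "containers": [{
                        "name": app,
                        "image": f"anthonychu/demo-kube-ingress-versions:{n}",
                        "imagePullPolicy": "Always",
                        "ports": [{"containerPort": 80}],
                        "readinessProbe": {
                            "httpGet": {"path": "/api/info", "port": 80},
                            "initialDelaySeconds": 3,
                            "periodSeconds": 3,
                        },
                    }]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"version{n}-service"},
        "spec": {
            # The selector must match the pod label set in the Deployment.
            "selector": {"app": app},
            "ports": [{"protocol": "TCP", "port": 80, "targetPort": 80}],
            "type": "ClusterIP",
        },
    }
    return [deployment, service]


if __name__ == "__main__":
    # Both versions in one v1 List document.
    items = [m for n in (1, 2) for m in manifests_for_version(n)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Saved to a file (for example `api.json`, a hypothetical name), the output could be applied with `kubectl apply -f api.json`.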

The ingress resource and the NGINX ingress controller

The next thing we need to create is an ingress resource:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: api
spec:
  rules:
  - http:
      paths:
      - path: /api/v1/
        backend:
          serviceName: version1-service
          servicePort: 80
      - path: /api/v2/
        backend:
          serviceName: version2-service
          servicePort: 80
```

This defines the rules for the ingress. Here, we have two rules, each routing a path prefix to its own backend service.
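Conceptually, each request path is compared against the rule paths, and NGINX picks the longest matching prefix location. A minimal Python sketch of that selection, assuming plain prefix matching (the `pick_backend` name and the `RULES` list are illustrative, not part of the controller):

```python
from typing import Optional, Sequence, Tuple

# (path prefix, service name) pairs copied from the ingress rules.
RULES: Sequence[Tuple[str, str]] = [
    ("/api/v1/", "version1-service"),
    ("/api/v2/", "version2-service"),
]


def pick_backend(request_path: str,
                 rules: Sequence[Tuple[str, str]] = RULES) -> Optional[str]:
    """Return the service whose rule path is the longest prefix of request_path."""
    matches = [(p, svc) for p, svc in rules if request_path.startswith(p)]
    if not matches:
        return None  # no rule matches this path
    # Longest prefix wins, as with NGINX prefix locations.
    return max(matches, key=lambda m: len(m[0]))[1]


print(pick_backend("/api/v1/info"))  # version1-service
print(pick_backend("/api/v2/info"))  # version2-service
print(pick_backend("/api/v3/info"))  # None
```

Note that selecting a backend does not change the path: the request still carries its full /api/v1/... URI.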

By itself, the ingress resource doesn't do anything; it needs an ingress controller. This template creates a replication controller for an NGINX ingress controller:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-ingress-rc
  labels:
    app: nginx-ingress
spec:
  replicas: 1
  selector:
    app: nginx-ingress
  template:
    metadata:
      labels:
        app: nginx-ingress
    spec:
      containers:
      - image: nginxdemos/nginx-ingress:0.7.0
        imagePullPolicy: Always
        name: nginx-ingress
        ports:
        - containerPort: 80
          hostPort: 80
        - containerPort: 443
          hostPort: 443
```
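The controller watches ingress resources and turns their rules into NGINX configuration. The exact template belongs to the controller; the Python sketch below only approximates the idea, emitting one `location` block per rule with a `proxy_pass` to an upstream named after the service (the upstream naming and the `nginx_locations` helper are hypothetical). Because `proxy_pass` here has no URI part, NGINX passes the original request URI to the backend unchanged.

```python
from typing import Sequence, Tuple


def nginx_locations(rules: Sequence[Tuple[str, str]]) -> str:
    """Render simplified NGINX location blocks for (path, service) rules.

    Approximation only: the real controller's template also handles hosts,
    TLS, headers and upstream definitions, which are omitted here.
    """
    blocks = []
    for path, service in rules:
        blocks.append(
            f"location {path} {{\n"
            # No URI after the upstream name, so the request URI
            # reaches the backend unchanged.
            f"    proxy_pass http://{service};\n"
            "}"
        )
    return "\n".join(blocks)


print(nginx_locations([("/api/v1/", "version1-service"),
                       ("/api/v2/", "version2-service")]))
```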

Expose NGINX to external traffic

The ingress is set up at this point, but it isn't reachable from outside the cluster yet. We can expose it by setting up a LoadBalancer service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx-ingress
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    name: http
  - protocol: TCP
    port: 443
    targetPort: 443
    name: https
  type: LoadBalancer
```

In Azure Container Service, this will expose the service using an Azure Load Balancer. It takes a couple of minutes for the load balancer to become available. To get the external IP, run kubectl get services:

```
$ kubectl get services
NAME               CLUSTER-IP     EXTERNAL-IP    PORT(S)          AGE
nginx-service      10.0.184.73    23.99.81.249   80/TCP,443/TCP   1d
version1-service   10.0.105.162   <none>         80/TCP           1d
version2-service   10.0.203.9     <none>         80/TCP           1d
```
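To script this step, the external IP can be pulled out of that table. The Python sketch below parses the `kubectl get services` output (the `external_ip` helper is hypothetical). Asking kubectl directly with `kubectl get service nginx-service -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` avoids column parsing and is likely more robust.

```python
from typing import Optional

SAMPLE = """\
NAME               CLUSTER-IP     EXTERNAL-IP    PORT(S)          AGE
nginx-service      10.0.184.73    23.99.81.249   80/TCP,443/TCP   1d
version1-service   10.0.105.162   <none>         80/TCP           1d
version2-service   10.0.203.9     <none>         80/TCP           1d
"""


def external_ip(table: str, service: str) -> Optional[str]:
    """Return the EXTERNAL-IP column for service, or None if it has none."""
    lines = table.strip().splitlines()
    # Locate the column by header name rather than by position.
    col = lines[0].split().index("EXTERNAL-IP")
    for line in lines[1:]:
        fields = line.split()
        if fields and fields[0] == service:
            value = fields[col]
            # kubectl shows <none>, or <pending> while the load balancer is provisioned.
            return None if value.startswith("<") else value
    return None


print(external_ip(SAMPLE, "nginx-service"))  # 23.99.81.249
```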

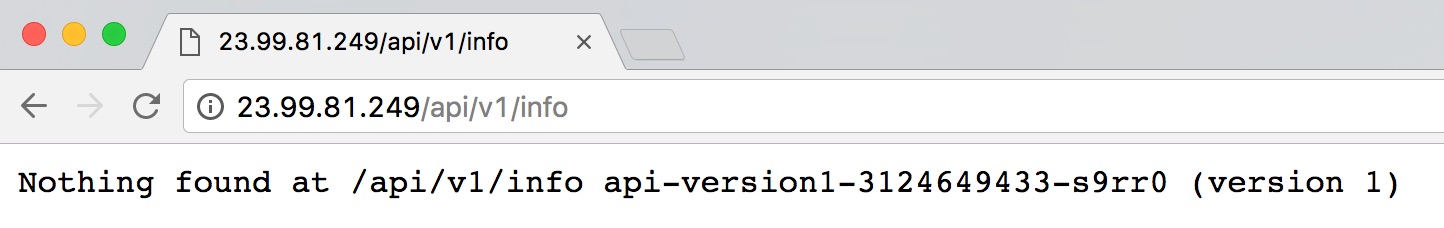

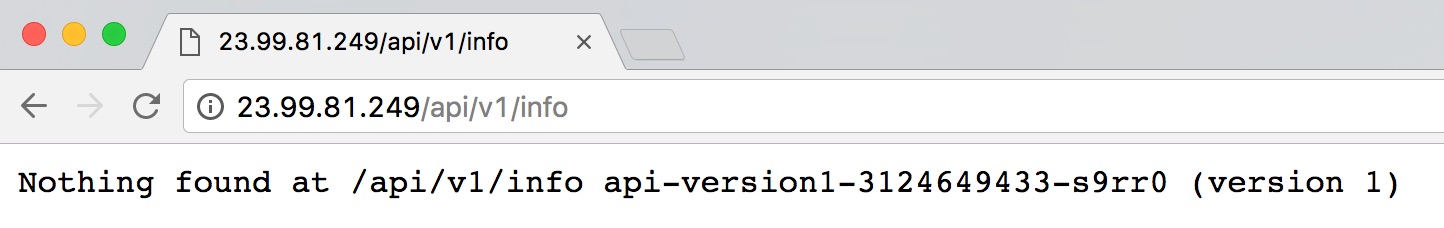

Once the load balancer is set up, we can try out the service. We would expect /api/v1/info to route to /api/info on version 1 of our backend service:

It's not quite working: we're getting the 404 page. If we refresh the page, we should see that we're hitting different containers running version 1, so at least the load balancing is working.

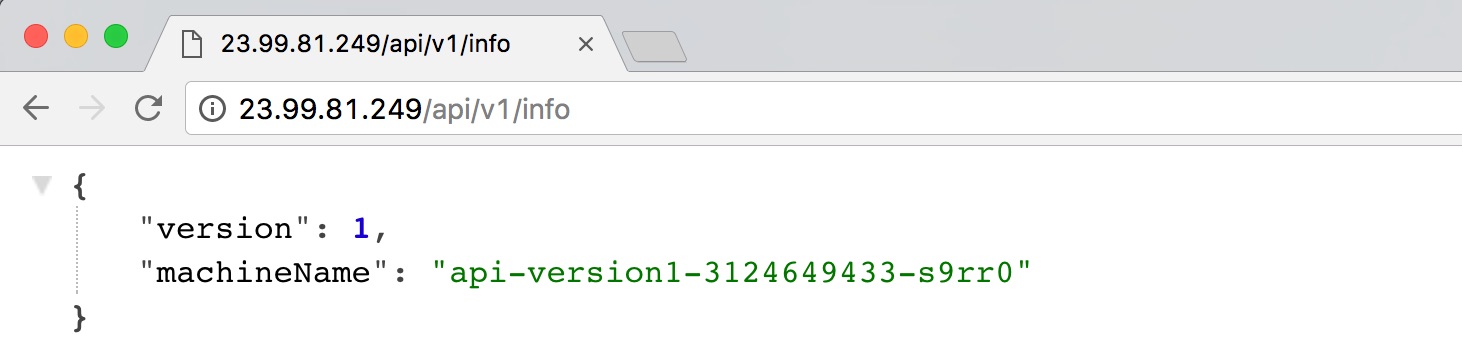

URL rewrites

As we can see in the screenshot above, /api/v1/info is actually being routed to /api/v1/info on our backend service, which only knows about /api/info. We'll need a rewrite rule that strips the /v1 from the path. For the NGINX ingress, we do this via the nginx.org/rewrites annotation on the ingress resource. Here's the ingress with the annotation added:
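The rewrite we want is a prefix replacement: the matched location prefix (/api/v1/) is swapped for the rewrite target (/api/) and the rest of the path is kept. This is the same behaviour NGINX has when a `proxy_pass` URL includes a URI. A Python sketch of that transformation (the `rewrite_path` name is hypothetical):

```python
def rewrite_path(path: str, location: str, replacement: str) -> str:
    """Replace the matched location prefix of path with replacement.

    Paths that don't start with the location prefix are returned unchanged.
    """
    if not path.startswith(location):
        return path
    return replacement + path[len(location):]


print(rewrite_path("/api/v1/info", "/api/v1/", "/api/"))  # /api/info
print(rewrite_path("/api/v2/info", "/api/v2/", "/api/"))  # /api/info
```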

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: api
  annotations:
    nginx.org/rewrites: "serviceName=version1-service rewrite=/api/;serviceName=version2-service rewrite=/api/"
spec:
  rules:
  - http:
      paths:
      - path: /api/v1/
        backend:
          serviceName: version1-service
          servicePort: 80
      - path: /api/v2/
        backend:
          serviceName: version2-service
          servicePort: 80
```
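The annotation value packs one `serviceName=... rewrite=...` pair per service, separated by semicolons. A Python sketch that parses such a value into a service-to-rewrite mapping, which can be handy for sanity-checking the string before applying it. The parser is illustrative, assumes exactly this format, and is not the controller's own code.

```python
from typing import Dict


def parse_rewrites(annotation: str) -> Dict[str, str]:
    """Parse 'serviceName=a rewrite=/x/;serviceName=b rewrite=/y/' into {a: /x/, b: /y/}."""
    result: Dict[str, str] = {}
    for entry in annotation.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing semicolon
        # Each entry is whitespace-separated key=value pairs.
        fields = dict(part.split("=", 1) for part in entry.split())
        result[fields["serviceName"]] = fields["rewrite"]
    return result


value = ("serviceName=version1-service rewrite=/api/;"
         "serviceName=version2-service rewrite=/api/")
print(parse_rewrites(value))  # {'version1-service': '/api/', 'version2-service': '/api/'}
```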

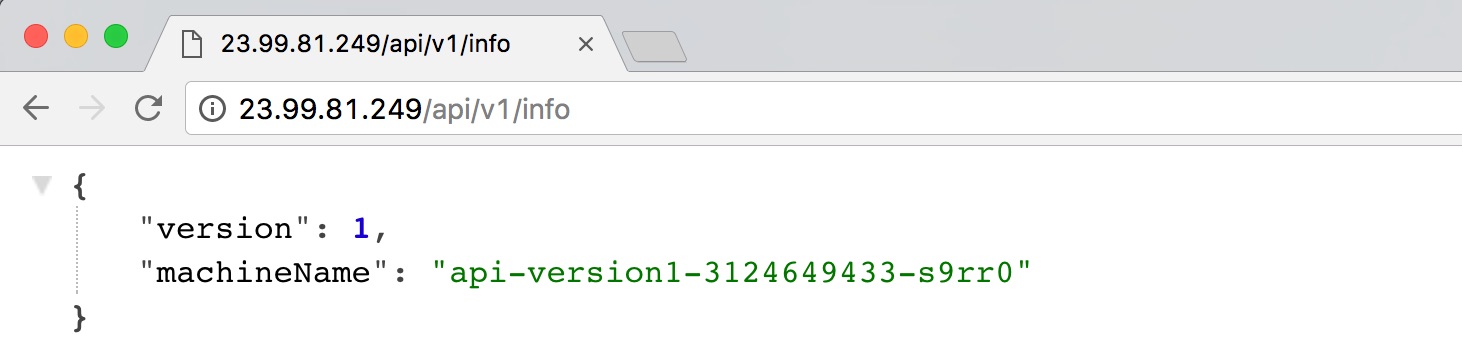

Now if we hit /api/v1/info, we should be routed to the correct endpoint on version 1:

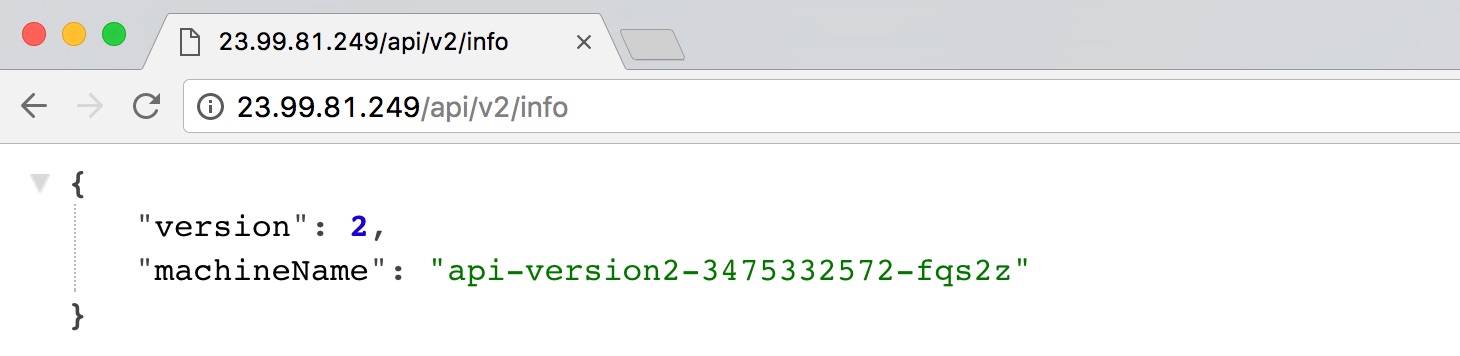

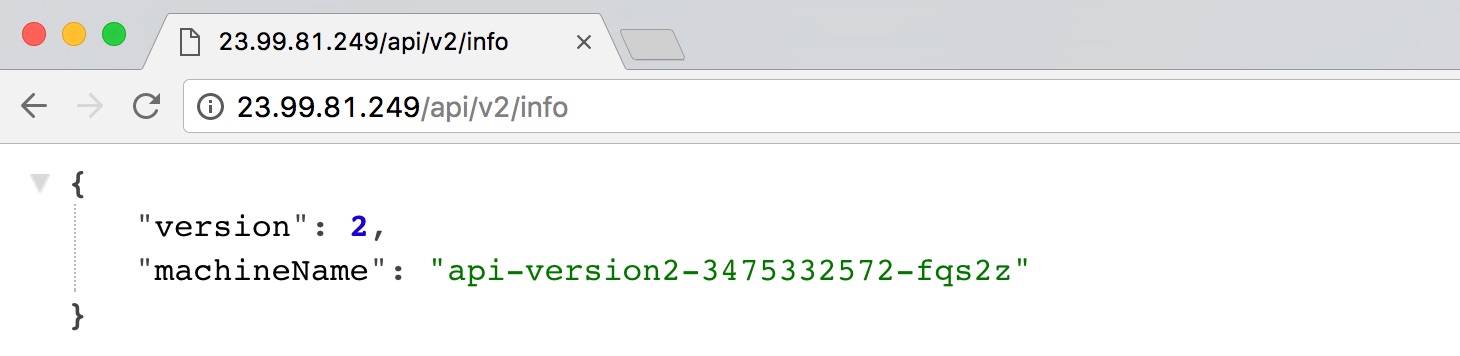

And /api/v2/info gives us version 2:
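To check the routing from a script rather than a browser, the Python sketch below requests a path several times and tallies which versions and machine names answer. The `probe` helper is hypothetical; the base URL is whatever EXTERNAL-IP `kubectl get services` reports for nginx-service. More than one machine name per version suggests requests are being spread across the replicas.

```python
import json
import urllib.request
from collections import Counter
from typing import Dict


def probe(base_url: str, path: str, attempts: int = 10) -> Dict[str, Counter]:
    """Call base_url + path repeatedly; count the versions and machine names seen."""
    versions: Counter = Counter()
    machines: Counter = Counter()
    for _ in range(attempts):
        # urlopen raises HTTPError on a 404, e.g. if the rewrite is missing.
        with urllib.request.urlopen(base_url + path, timeout=5) as resp:
            info = json.loads(resp.read().decode("utf-8"))
        versions[info["version"]] += 1
        machines[info["machineName"]] += 1
    return {"versions": versions, "machines": machines}

# Usage, with the external IP of nginx-service:
#   for p in ("/api/v1/info", "/api/v2/info"):
#       print(p, probe("http://<EXTERNAL-IP>", p))
```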

Source code
